International Workshop - Human Supervision and Control in

Engineering and Music - Kassel, Germany

Sept 21-24, 2001

Gestural Control of Music

Marcelo M. Wanderley

IRCAM - Centre Pompidou

1, Pl. Igor Stravinsky

75004 - Paris - France

mwanderley@acm.org

Abstract

Digital musical instruments do not depend on physical constraints

faced by their acoustic counterparts, such as characteristics of tubes,

membranes, strings, etc. This fact permits a huge diversity of

possibilities

regarding sound production, but on the other hand strategies to design

and perform these new instruments need to be devised in order to provide

the same level of control subtlety available in acoustic instruments. In

this paper I review various topics related to gestural control of music

using digital musical instruments and identify possible new trends in this

domain.

Introduction

The evolution of computer music has brought to light a plethora of sound

synthesis methods available in general and inexpensive computer

platforms,

allowing a large community direct access to real-time computer-generated

sound.

Both signal and physical models have already been considered

as sufficiently mature to be used in concert situations, although much

research continues to be carried out on the subject, constantly bringing

innovative solutions and developments [8]

[88] [64].

On the other hand, input device technology that captures different human

movements can also be viewed as being at an advanced stage [63]

[7], considering both non-contact

movements

and manipulation.

Specifically regarding manipulation, tactile and force feedback devices

for both non-musical and musical contexts have already been proposed [12].

Therefore, the question of how to design and perform new computer-based

musical instruments - consisting of gesturally controlled, real time

computer-generated

sound - needs to be considered in order to obtain similar levels of control

subtlety as those available in acoustic instruments.

This topic amounts to a branch of knowledge known as human-computer

interaction (HCI).

In this context, various questions come to mind, such as:

-

Which are the specific constraints that exist in the musical context with

respect to general human-computer interaction?

-

Given the various contexts related to interaction in sound generating

systems,

what are the similarities and differences within these contexts

(interactive installations, digital musical instrument manipulation,

dance-music interfaces)?

-

How to design systems for these various musical contexts? Which system

characteristics are common and which are context specific?

Human-Computer Interaction and Music

Gestural control of computer generated sound can be seen as

a highly specialized branch of human-computer interaction (HCI)

involving the simultaneous control of multiple parameters,

timing,

rhythm,

and user training [62].

According

to A. Hunt and R. Kirk [39]:

In stark contrast to the commonly accepted choice-based nature

of many computer interfaces are the control interfaces for musical

instruments

and vehicles, where the human operator is totally in charge of the action.

Many parameters are controlled simultaneously and the human operator has

an overall view of what the system is doing. Feedback is gained not by

on-screen prompts, but by experiencing the moment-by-moment effect of each

action with the whole body.

Hunt and Kirk consider various attributes to be characteristic of real-time

multiparametric control systems [39, p. 232]. Some of these are:

-

There is no fixed ordering to the human-computer dialogue.

-

There is no single permitted set of options (e.g. choices from a menu)

but rather a series of continuous controls.

-

There is an instant response to the user's movements.

-

The control mechanism is a physical and multi-parametric device which must

be learned by the user until the actions become automatic.

-

Further practice develops increased control intimacy and thus competence

of operation.

-

The human operator, once familiar with the system, is free to perform other

cognitive activities whilst operating the system (e.g. talking while

driving

a car).

Interaction Context

Taking into account the specificities described above, let me consider

the various existing contexts in computer music.

These different contexts are the result of the evolution of electronic

technology, which allows, for instance, the same input device to be used in

different

situations, e.g. to generate sounds (notes) or to control the temporal

evolution of a set of pre-recorded notes. If traditionally these two

contexts

corresponded to two separate roles in music - those of the performer and

the conductor, respectively - today not only have the differences between

traditional roles been minimized, but new contexts derived from

metaphors

created in human-computer interaction are now available in music.

One of these metaphors is drag and drop, which has been used in

[104] with a graphical drawing tablet

as the input device, a sort of gesturally controlled sequencer, whereas

in [80] and in [102]

the same tablet was used in the sense of a more traditional instrument.

Therefore, the same term interaction in a musical context may

mean [100]:

-

instrument manipulation (performer-instrument interaction)

in the context of real-time sound synthesis control.

-

device manipulation in the context of score-level control, e.g.

a conductor's baton used for indicating the rhythm to a previously

defined computer generated sequence [51]

[3] [48].

Wessel and Wright use the term dipping to designate

this context [104].

-

other interaction contexts related to traditional HCI interaction

styles,

such as drag and drop, scrubbing [104]

or navigation [90].

-

device manipulation in the context of post-production activities,

for instance in the case of gestural control of digital audio effects

or sound spatialisation.

-

interaction in the context of interactive multimedia

installations

(where one person or many people's actions are sensed in order to provide

input values for an audio/visual/haptic system).

But also, to a different extent:

-

interaction in the context of dance (dance/music

interfaces)

[15].

-

computer games, i.e., manipulation of a computer game input device

[22].

although in these last two cases the generation of sound is not necessarily

the primary goal of the interaction.

Music as Supervisory Control

Another way to consider the different contexts in music is to relate them

to supervisory control theory. For instance, Sheridan [81]

draws such a parallel, where the notions of

zeroth,

first

and second order control correspond to different musical control

levels, i.e., biomechanics (performer gestures and feedback),

putting

notes together, and composing and conducting the synchronization

of musicians.

Gestural Control of Sound Synthesis

I will now focus on the main interest of this paper and analyze the

situation of performer-digital musical instrument interaction.

The focus of this work can be summarized as expert interaction by means

of the use of input devices to control real-time sound synthesis

software.

The suggested strategy to approach this subject consists of dividing

the subject of gestural control of sound synthesis into four parts [98]:

-

Definition and typologies of gesture

-

Gesture acquisition and input device design

-

Mapping of gestural variables to synthesis variables

-

Synthesis algorithms

The goal is to show that all four parts are equally important to the design

of new digital musical instruments. This has been developed in detail in

[96].

Due to space constraints, I will focus here on item 2, gesture

acquisition

and input device design. Item 1 has been studied in collaboration with

Claude Cadoz in [14] and item 3 in

collaboration

with Andy Hunt and Ross Kirk in [40].

Synthesis algorithms have been explored in various articles and textbooks,

including [8], [72]

and also in the perspective of real-time control in [18].

Digital Musical Instruments

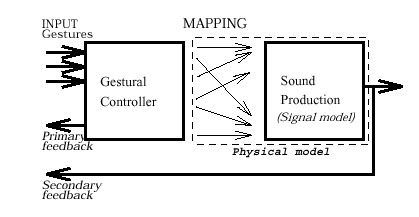

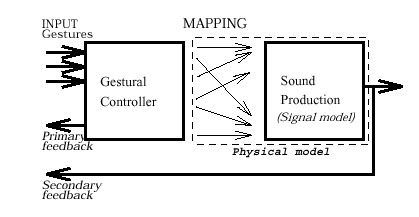

In this work, the term digital musical instrument (DMI) is used to represent an instrument that contains a gestural

interface

(or gestural controller unit) separate from a sound generation unit. Both units

are independent and related by mapping strategies [34]

[56] [76]

[79]. This is shown in figure 1.

Figure 1: A Digital Musical Instrument representation.

The term gestural controller can be defined here as the input part of the DMI, where physical

interaction

with the player takes place. Conversely, the sound generation unit can

be seen as the synthesis algorithm and its controls. The mapping layer

refers to the liaison strategies between the outputs of the gestural

controller

and the input controls of the synthesis algorithm.
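The three-part structure described above (gestural controller, mapping layer, sound generation unit) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name, the dictionary-based gesture and control formats, and the breath and lip variables are all hypothetical, and the "sound generator" merely reports its control values instead of rendering audio.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical formats: gesture frames and synthesis controls are both
# plain name -> value dictionaries.
GestureFrame = Dict[str, float]
SynthControls = Dict[str, float]


@dataclass
class DigitalMusicalInstrument:
    """A DMI as three independent parts: controller, mapping, sound generator."""
    read_controller: Callable[[], GestureFrame]        # gestural controller
    mapping: Callable[[GestureFrame], SynthControls]   # mapping layer
    sound_generator: Callable[[SynthControls], str]    # stand-in for synthesis

    def step(self) -> str:
        gesture = self.read_controller()
        controls = self.mapping(gesture)
        return self.sound_generator(controls)


def breath_controller() -> GestureFrame:
    # Stand-in for sensor readings, normalized to 0..1 (assumed values).
    return {"breath": 0.5, "lip": 0.2}


def simple_mapping(g: GestureFrame) -> SynthControls:
    # Direct mapping: breath -> amplitude, lip pressure -> bend in semitones.
    return {"amplitude": g["breath"], "bend": 2.0 * g["lip"]}


def describe_sound(c: SynthControls) -> str:
    # A real sound generation unit would render audio; this only reports controls.
    return f"amplitude={c['amplitude']:.2f} bend={c['bend']:.2f}"


dmi = DigitalMusicalInstrument(breath_controller, simple_mapping, describe_sound)
print(dmi.step())
```

Because the three parts only meet through the two dictionary formats, any one of them can be swapped without touching the others, which is the independence that the separation into units is meant to provide.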

This separation is impossible in the case of traditional acoustic

instruments,

where the gestural interface is also part of the sound production unit.

If one considers, for instance, a clarinet, the reed, keys, holes, etc.

are at the same time both the gestural interface (where the performer

interacts

with the instrument) and the elements responsible for the sound production.

The idea of a DMI is analogous to "splitting" the clarinet in a way where

one could separate these two functions (gestural interface and sound

generator)

and use them independently.

Clearly, this separation of the DMI into two independent units is

potentially

capable of extrapolating the functionalities of a conventional musical

instrument, the latter tied to physical constraints. On the other hand,

basic characteristics of existing instruments may be lost and/or difficult

to reproduce, such as tactile/force feedback.

Gesture and Feedback

In order to devise strategies concerning the design of new digital musical

instruments for gestural control of sound synthesis, it is essential

to analyze the characteristics of actions produced by expert

instrumentalists

during performance. These actions are commonly referred to as

gestures

in the musical domain. In order to avoid discussing all nuances of the

meaning of gesture, let me initially consider

performer gestures

as performer actions produced by the instrumentalist during

performance.

A detailed discussion is presented in [14].

Performer Gestures

Instrumentalists simultaneously execute various types of gestures during

performance. Some of them are necessary for the production of sound [11],

others may not be clearly related to sound production [23]

[99], but are nevertheless present

in most highly-skilled instrumentalists' performances.

One can approach the study of gestures by either analyzing the possible

functions of a gesture during performance [69]

or by analyzing the physical properties of the gestures taking place [17].

By identifying gestural characteristics - functional, in a specific

context,

or physiological - one can ultimately gain insight into the design of

gestural

acquisition systems.

Regarding both approaches, it is also important to be aware of the

existing

feedback available to the performer, be it visual, auditory or

tactile-kinesthetic. Feedback can also be considered depending on its characteristics, as:

-

Primary/secondary [92],

where primary feedback encompasses visual, auditory (clarinet key noise,

for instance) and tactile-kinesthetic feedback, and secondary feedback

relates to the sound produced by the instrument.

-

Passive/active [7], where

passive feedback relates to feedback provided through physical

characteristics

of the system (a switch noise, for instance) and active feedback is the

one produced by the system in response to a certain user action (sound

produced by the instrument).

Tactile-kinesthetic, or tactual [6],

feedback is composed of the tactile and proprioceptive senses [75].

Gestural Acquisition

Once the gesture characteristics have been analyzed, it is essential to

devise an acquisition system that will capture these characteristics

for further use in the interactive system.

In the case of performer - acoustic instrument interaction, this

acquisition

may be performed in three ways:

-

Direct acquisition, where one or several sensors are used to

monitor

the performer's actions. The signals from these sensors present isolated basic

physical features of a gesture: pressure, linear or angular displacement,

and acceleration, for instance. A different sensor is usually needed to

capture each physical variable of the gesture.

-

Indirect acquisition, where gestures are isolated from the

structural

properties of the sound produced by the instrument [1]

[44] [68]

[26] [61].

Signal processing techniques can then be used in order to derive

the performer's

actions from the analysis of the fundamental frequency of the sound, its

spectral envelope, its power distribution, etc.

-

Physiological signal acquisition, the analysis of physiological

signals, such as EMG [45] [65].

Commercial systems have been developed based on the analysis of muscle

tension and used in musical contexts [85]

[5] [86]

[49] [105].

Although it captures the essence of the movement, this technique is

hard to master since it may be difficult to separate the meaningful parts

of the signal obtained from physiological measurement.

Direct acquisition has the advantage of simplicity when compared to

indirect

acquisition, due to the mutual influence of different parameters present

in the resulting sound (i.e. instrument acoustics, room effect and

performer

actions). Nevertheless, due to the independence of the variables captured,

direct acquisition techniques may underestimate the interdependency of

the various variables obtained.

Direct Acquisition

Direct acquisition is performed by the use of different sensors to capture

performer actions. Depending on the type of sensors and on the combination

of different technologies in various systems, different movements may be

tracked.

Citing B. Bongers, a well-known alternate controller designer [7]:

Sensors are the sense organs of a machine. Sensors convert

physical energy (from the outside world) into electricity (into the machine

world). There are sensors available for all known physical quantities,

including the ones humans use and often with a greater range. For instance,

ultrasound frequencies (typically 40 kHz used for motion tracking) or light

waves in the ultraviolet frequency range.

Sensor Characteristics and Musical Applications

Some authors consider that the most important sensor

characteristics

are sensitivity, stability and repeatability [83].

Other important characteristics relate to the linearity and selectivity

of the sensor's output, its sensitivity to ambient conditions, etc. P.

Garrett considers six descriptive parameters applicable to sensors

[32]: accuracy,

error,

precision,

resolution,

span,

and range.

In general instrumentation circuits, sensors typically need to be both

precise and accurate, and present a reasonable resolution. In the musical

domain, it is often stressed that the choice of a transducer technology

matching a specific musical characteristic relates to human performance

and perception: for instance, mapping of the output of a sensor that is

precise but not very accurate to a variable controlling loudness may be

satisfactory, but if it is used to control pitch, its inaccuracy will

probably

be more noticeable.
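The loudness versus pitch remark can be made concrete with a small numerical sketch. Everything below is assumed for illustration: the readings are invented, and the two mapping ranges (two octaves of pitch, 60 dB of loudness) are arbitrary choices, not values from the cited texts. The point is only that the same constant offset (poor accuracy) with a small spread (good precision) translates into very different errors depending on the musical variable it controls.

```python
import statistics


def summarize(readings, true_value):
    """Return (bias, spread): bias reflects accuracy, spread reflects precision."""
    bias = statistics.mean(readings) - true_value
    spread = statistics.pstdev(readings)
    return bias, spread


# Hypothetical normalized readings (0..1) of a sensor held at a true value of 0.50:
# tightly clustered (precise) but offset by about 0.01 (not very accurate).
readings = [0.510, 0.511, 0.509, 0.510, 0.510]
bias, spread = summarize(readings, true_value=0.50)

# Assumed mapping ranges, chosen only for illustration.
PITCH_RANGE_CENTS = 2400.0   # two octaves
LOUDNESS_RANGE_DB = 60.0

pitch_error_cents = bias * PITCH_RANGE_CENTS
loudness_error_db = bias * LOUDNESS_RANGE_DB
print(f"bias={bias:.3f} spread={spread:.4f}")
print(f"pitch error: {pitch_error_cents:.1f} cents, "
      f"loudness error: {loudness_error_db:.2f} dB")
```

With these assumed ranges, a 1% offset corresponds to roughly a quarter of a semitone in pitch but well under one decibel in loudness.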

Various texts describe different sensors and transducer technologies

for general and musical applications, such as [32]

and [33] [7]

[28], respectively. The reader is

directed

to these texts for further information.

Analog to MIDI Conversion

For the case of gesture acquisition with the use of different sensors,

the signals obtained at the sensor outputs are usually available in an

analog format, basically in the form of voltage or current signals. In

order to be able to use these signals as computer inputs, they need to

be sampled and converted into a suitable format, usually MIDI (Musical

Instrument

Digital Interface) [42] or more advanced

protocols [29].
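As a rough sketch of what such a conversion involves, the following Python fragment quantizes a sensor voltage to a 7-bit value and wraps it in a MIDI Control Change message. The 0 to 5 V input range and the function names are assumptions made for this example; the message layout (status byte `0xB0` plus channel, then controller number and value, each limited to 7 bits) follows the MIDI 1.0 specification, in which controller number 2 is assigned to breath control.

```python
def voltage_to_midi_value(voltage, v_min=0.0, v_max=5.0):
    """Quantize a sensor voltage to a 7-bit MIDI data value (0..127).

    The 0..5 V default range is an assumption for this sketch.
    """
    clamped = min(max(voltage, v_min), v_max)
    return round((clamped - v_min) / (v_max - v_min) * 127)


def control_change(channel, controller, value):
    """Build a 3-byte MIDI Control Change message (channel 0..15)."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("MIDI field out of range")
    return bytes([0xB0 | channel, controller, value])


# A mid-scale voltage sent as breath control (controller 2) on the first channel.
message = control_change(channel=0, controller=2, value=voltage_to_midi_value(2.5))
print(message.hex())
```

The quantization step makes the resolution limit visible: whatever the quality of the sensor, a single Control Change message can only distinguish 128 levels.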

Various analog-to-MIDI converters have been proposed and are widely

available commercially. The first examples were developed

in the eighties [95] [84].

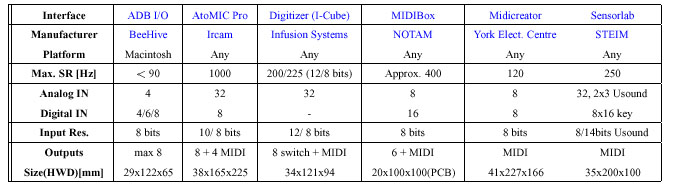

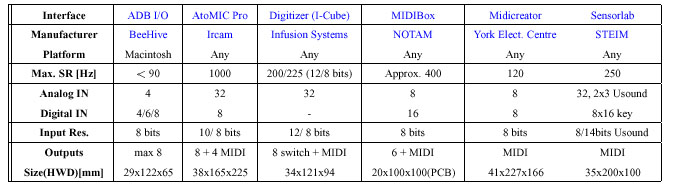

Comparison of Analog-to-MIDI interfaces

Concerning the various discussions on the advantages and drawbacks of the

MIDI protocol and its use [55], strictly

speaking, nothing forces someone to use MIDI or prevents the use of faster

or different protocols. It is interesting to notice that many existing

systems have used communication protocols other than MIDI in order to avoid

speed and resolution limitations. One such system is the TGR

(transducteur gestuel rétroactif, from ACROE) [13].

I compare here six commonly available models of sensor-to-MIDI

interfaces in order to give the reader an idea of their

differences.

Their basic characteristics are shown in table 1.

Table 1: Comparison of 6 Analog-to-MIDI commercial

interfaces.

One can notice that interface characteristics may differ

significantly,

but most models present comparable figures. As already pointed

out, the limiting factor regarding speed and resolution is basically the

specifications of the MIDI protocol, not the electronics involved in the

design.
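The speed limit imposed by the protocol can be estimated with simple arithmetic. The sketch below assumes the standard MIDI 1.0 serial link (31250 bits per second, each byte framed by a start and a stop bit) and one 3-byte message per sensor update; it ignores running status and any other traffic on the cable, so the results are upper bounds, not measurements of the interfaces in table 1.

```python
MIDI_BAUD = 31250      # bits per second on a standard MIDI 1.0 serial link
BITS_PER_BYTE = 10     # 8 data bits plus one start and one stop bit


def max_update_rate_hz(num_sensors, bytes_per_message=3):
    """Upper bound on the per-sensor update rate when all sensors share one link."""
    bytes_per_second = MIDI_BAUD / BITS_PER_BYTE
    return bytes_per_second / (bytes_per_message * num_sensors)


for n in (1, 8, 16):
    print(n, "sensors:", round(max_update_rate_hz(n), 1), "Hz each")
```

Under these assumptions a single sensor can be refreshed at about 1 kHz at most, and the available rate drops in proportion to the number of sensors sharing the link.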

Indirect Acquisition

As opposed to direct acquisition, indirect acquisition provides

information about performer actions from the evolution of structural

properties

of the sound being produced by an instrument. In this case, the only sensor

is a microphone, i.e., a sensor measuring pressure or gradient of pressure.

Due to the complexity of the information available in the instrument's

sound captured by a microphone, different real-time signal processing

techniques

are used in order to distinguish the effect of a performer's action from

environmental features, such as the influence of the acoustical properties

of the room.

Generically, one could identify basic sound parameters to be extracted

in real-time. P. Depalle cites four parameters [98]:

-

Short-time energy, related to the dynamic profile of the signal,

indicates the dynamic level of the sound but also possible differences

of the instrument position related to the microphone.

-

Fundamental frequency, related to the sound's melodic profile, gives

information about fingering, for instance.

-

Spectral envelope, representing the distribution of sound partial

amplitudes, may give information about the resonating body of the

instrument.

-

Amplitudes, frequencies and phases of sound partials, which alone can

provide much of the information obtained by the previous parameters.
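The first two parameters in the list above can be illustrated with a minimal Python sketch. It computes the short-time energy of one analysis frame and estimates the fundamental frequency by picking the autocorrelation peak. Autocorrelation is used here only as one well-known estimation technique, not necessarily the one used in the cited works; the frame length, sampling rate, search range and test tone are assumptions made for the example.

```python
import math


def short_time_energy(frame):
    """Mean squared amplitude of one analysis frame."""
    return sum(x * x for x in frame) / len(frame)


def fundamental_frequency(frame, sample_rate, f_min=50.0, f_max=1000.0):
    """Estimate f0 by picking the lag with the highest autocorrelation."""
    lag_min = int(sample_rate / f_max)
    lag_max = int(sample_rate / f_min)
    best_lag, best_score = lag_min, float("-inf")
    for lag in range(lag_min, lag_max + 1):
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


# Synthetic test frame: a 200 Hz sine of amplitude 0.5 sampled at 8 kHz.
SAMPLE_RATE = 8000
frame = [0.5 * math.sin(2 * math.pi * 200.0 * n / SAMPLE_RATE) for n in range(1024)]

energy = short_time_energy(frame)
f0 = fundamental_frequency(frame, SAMPLE_RATE)
print(f"energy={energy:.3f} f0={f0:.1f} Hz")
```

On a real instrument signal the energy would also vary with the distance to the microphone, and a robust pitch tracker would need additional safeguards against octave errors and noise.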

Obviously, in order to perform the analysis of the above or other

parameters

during indirect acquisition, it is important to consider the correct

sampling

of the signal. According to the Nyquist theorem, the sampling frequency needs to

be at least twice the maximum frequency of the signal to be

sampled.

Although one could reasonably consider that frequencies of performer

actions can be limited to a few hertz, fast actions can potentially present

higher frequencies. A sampling frequency value typically proposed for

gestural

acquisition is 200 Hz [50]. Some

systems

may use higher values, from 300 Hz to 1 kHz [13].

Recently, researchers considered the ideal sampling frequency to be around

4 kHz [30] [29].

As a general figure, one can consider 1 kHz as sufficient for most

applications.
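To make the consequence of the sampling criterion tangible, the sketch below checks the sampling rates quoted above against a hypothetical gesture component at 150 Hz (a value chosen only for illustration) and computes the frequency at which that component would appear after sampling. The function names are assumptions of this example.

```python
def satisfies_nyquist(max_signal_hz, sample_rate_hz):
    """True if the sampling rate is at least twice the highest signal frequency."""
    return sample_rate_hz >= 2 * max_signal_hz


def alias_frequency(signal_hz, sample_rate_hz):
    """Apparent frequency of a sinusoid after sampling, folded into 0..fs/2."""
    folded = signal_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


GESTURE_HZ = 150  # hypothetical fast component of a performer action

for fs in (200, 1000, 4000):
    print(fs, satisfies_nyquist(GESTURE_HZ, fs), alias_frequency(GESTURE_HZ, fs))
```

At 200 Hz the assumed component would be misread as a 50 Hz movement, whereas at 1 kHz or 4 kHz it is captured at its true frequency.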

Several works on indirect acquisition systems have already been

presented.

They include both hybrid systems (using also sensors), such as the

hypercello

[46] and pure indirect systems, such

as the analysis of clarinet performances [68]

[26] and guitar [61].

Gestural Controllers

Once one or several sensors are assembled as part of a single device, this

device is called an input device or a gestural controller.

As cited above, the gestural controller is the part of the

DMI

where physical interaction takes place. Physical interaction

here means the actions of the performer, be they body movements,

empty-handed

gestures or object manipulation, and the perception by the performer of

the instrument's status and response by means of tactile-kinesthetic,

visual

and auditory senses.

Due to the large range of human actions to be captured by the

controller and depending on the interaction context where it will be used (cf. section

1.1.1),

its design may vary from case to case. In order to analyze the various

possibilities, we propose a three-tier classification of existing

controller

designs as [98] [7]:

-

Instrument-like controllers, where the input device design tends

to reproduce each feature of an existing (acoustic) instrument in detail.

Many examples can be cited, such as electronic keyboards, guitars,

saxophones,

marimbas, and so on.

A sub-division of this class of gestural controllers would be that

of

Instrument-inspired controllers, which, although largely inspired

by the design of an existing instrument, are conceived for another use. One example

is the

SuperPolm violin, developed by S. Goto, A. Terrier,

and P. Pierrot [66] [35],

where the input device is loosely based on a violin shape, but is used

as a general device for the control of granular synthesis.

-

Augmented Instruments, also called Hybrid Controllers, are

instruments augmented by the addition of extra sensors [4]

[46]. Commercial augmented instruments

include the Yamaha Disklavier, used for instance in pieces by J.-C.

Risset [71] [70].

Other examples include the flute [67]

[107] and the trumpet [21]

[41] [89],

but any existing acoustic instrument may be instrumented to different

degrees

by the addition of sensors.

-

Alternate controllers, whose design does not follow that of an established

instrument. Some examples include the Hands [95],

graphic drawing tablets [80],

etc. For instance, a gestural controller using the shape of the oral cavity

has been proposed in [60].

For instrument-like controllers, although representing a simplified

(first-order)

model of the acoustic instrument, many of the gestural skills developed

by the performer on the acoustic instrument can be readily applied to the

controller. Conversely, for a non-expert performer, these controllers

present

roughly the same constraints as those of an acoustic instrument, and

technical difficulties inherent to the former may have to be overcome by

the non-expert performer.

Alternate controllers, on the other hand, allow the use of other gesture

vocabularies than those of acoustic instrument manipulation, these being

restricted only by the technology choices in the controller design, thus

allowing non-expert performers the use of these devices. Even so,

performers

still have to develop specific skills for mastering these new gestural

vocabularies [95].

An Analysis of Existing Input Devices

A reasonable number of input devices have been proposed to perform

real-time control of music [73] [63],

most of them resulting from composers'/players' idiosyncratic approaches

to personal artistic needs. These interfaces, although often revolutionary

in concept, have mostly remained specific to the needs of their inventors.

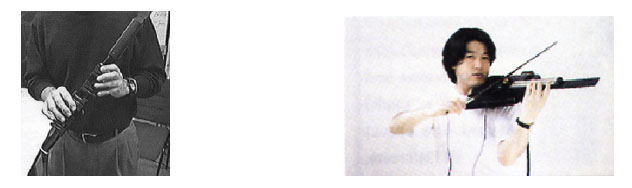

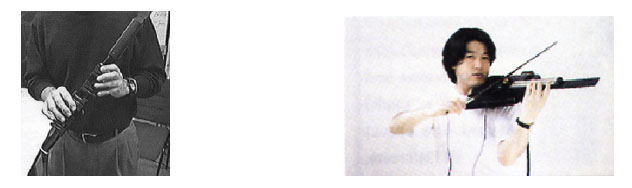

Four examples of gestural controllers are shown in figures 2

and 3.

The advantages and drawbacks of each controller type depend mostly

on the user goals and background, but unfortunately systematic means of

evaluating gestural controllers are not commonly available.

Figure 2: Left: Joseph Butch Rovan holding

a WX7, an instrument-like (saxophone) controller by Yamaha.

Right:

Suguru Goto and the SuperPolm, an instrument-inspired

controller

(violin).

Figure 3: Left: Marc Battier manipulating the Pacom,

by Starkier and Prevot. Right: Jean-Philippe Viollet

using the WACOM graphic tablet. Both devices are considered

alternate

controllers.

From an engineering point of view, it is important to propose means

to compare existing designs in order to evaluate their strong and weak points and eventually come up

with guidelines for the design of new input devices.

Some authors consider that these new devices, designed according to

ergonomical and cognitive principles, could eventually become general tools

for musical control [93] [57]

[87] [58].

Design Rationale: Engineering versus Idiosyncratic approaches

The use of pure engineering/ergonomical approaches can be challenged by

the comparison with the evolution of input device design in human-computer

interaction. In fact, researcher W. Buxton [10]

considers HCI and ergonomics as failed sciences. He argues that

although a substantial volume of literature on input device

evaluation/design

in these two areas has already been proposed, currently available devices

have benefited little from all this knowledge and therefore major innovations

are not often proposed.

The problem with both points of view - engineering versus

idiosyncratic

- seems to be their application context. Although one can always question

the engineering approach by stressing the role of creativity against

scientific

design [20], the proposition of scientific

methodologies is also a key factor for the evaluation of existing

gestural controllers.

Conversely, engineering methodologies should not prevent the use of

creativity in design, although this can be a side effect of structured

design rationales. But without a common basis for evaluation, the

differentiation

between input devices and simple gadgets turns out to be hazardous.

As stated before, the design of a new input device for musical

performance

is generally directed towards the fulfillment of specific and sometimes

idiosyncratic musical goals, but is

always based on an engineering

corpus of knowledge. This technical background allows the choice of

transducer

technologies and circuit designs that implement the interface needed to

achieve the initial musical goals.

Therefore, although the final development goals are musical and

consequently

any criticism of these goals turns into a question related to aesthetical

preferences, their design is based on engineering principles that can,

and

need to, be evaluated and compared. This evaluation is essential, for

instance, in the selection of existing input devices for performing

different

tasks [80], but it can also be useful

in the identification of promising new opportunities for the design of

novel input devices [16].

Gestural Controller Design

It may also be useful to propose guidelines for the design of new input

devices based on knowledge from related fields, such as experimental

psychology,

physiology and human-computer interaction. Taking the example of the

research

in human-computer interaction, many studies have been carried out on the

design and evaluation of input devices for general (non-expert)

interaction.

The most important goal in these studies is the improvement of accuracy

and/or time response for a certain task, following the relationship known

as Fitts' law [47].

Also, standard methodologies for tests have been proposed and generally

consist of pointing and/or dragging tasks, where the size and distance

between target squares are used as test parameters.
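For readers unfamiliar with Fitts' law, the relationship can be sketched as follows. The text does not state which formulation is meant; the example assumes the widely used Shannon formulation, in which movement time grows linearly with an index of difficulty computed from target distance and width. The regression constants `a` and `b` are made-up values for illustration; in practice they are fitted to measurements for a given device and task.

```python
import math


def index_of_difficulty(distance, width):
    """Shannon formulation of the index of difficulty, in bits."""
    return math.log2(distance / width + 1.0)


def movement_time(distance, width, a=0.1, b=0.15):
    """Predicted movement time in seconds.

    a (seconds) and b (seconds per bit) are hypothetical regression constants.
    """
    return a + b * index_of_difficulty(distance, width)


# Same distance, progressively smaller targets (arbitrary but consistent units).
for width in (40, 20, 10):
    print(width, round(index_of_difficulty(140, width), 2),
          round(movement_time(140, width), 3))
```

Comparing two input devices then amounts to comparing the constants fitted for each of them over the same set of pointing or dragging tasks.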

In 1994, R. Vertegaal and collaborators presented a

methodology,

derived from standard HCI tests, that addressed the comparison of input

devices in a timbre navigation task [90]

[92]. Although pioneering in the

field, the methodology used consisted of a pure selection (pointing and

acquisition) task, i.e., the context of the test was navigation in a

four-parameter timbral space [91],

not a traditional musical context in the sense of instrumental performance.

In 1996, Vertegaal et al. [93]

[87] proposed an attempt to

systematically

relate a hypothetical musical function (dynamic - absolute or relative

- or static) to a specific sensor technology and to the feedback available

with this technology. This means that certain sensor technologies may

outperform

others for a specific musical function. The interest of this work is that

it allows a designer to select a sensor technology based on the proposed

relationships, thus reducing the need for idiosyncratic solutions. An

evaluation

of this methodology is presented in [102].

Another attempt to address the evaluation of well-known HCI

methodologies

and their possible adaptation to the musical domain was presented in [62].

Although one cannot expect to apply methodologies from other fields directly

to the musical domain, at least the analysis of similar developments

in better established fields may help in finding directions suitable for the

case of computer music.

Mapping of Gestural Variables to Synthesis Inputs

Once gesture variables are available either from independent sensors or

as a result of signal processing techniques in the case of indirect

acquisition,

one then needs to relate these output variables to the available synthesis

input variables.

Depending on the sound synthesis method to be used, the number and

characteristics

of these input variables may vary. For signal model methods, one may have

a) amplitudes, frequencies and phases of sinusoidal sound partials for

additive synthesis; b) an excitation frequency plus each formant's center

frequency, bandwidth, amplitude and skew for formant synthesis; c) carrier

and modulation coefficients (c:m ratio) for frequency modulation (FM)

synthesis,

etc.

It is clear that the relationship between the gestural variables and

the synthesis inputs available is far from obvious. How does one relate

a gesture to a c:m ratio?
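A minimal sketch may help show why this relationship is not obvious. The first function below implements simple two-operator FM, in which the c:m ratio fixes the modulator frequency relative to the carrier. The second function is one arbitrary, hypothetical way of deriving a c:m ratio and a modulation index from two normalized gesture values; the list of ratios, the index range and the gesture names are assumptions of this example, and nothing in the synthesis method itself suggests this particular choice over any other.

```python
import math


def fm_sample(t, carrier_hz, cm_ratio, index):
    """One sample of simple two-operator FM; cm_ratio is c:m expressed as c/m."""
    modulator_hz = carrier_hz / cm_ratio
    return math.sin(2 * math.pi * carrier_hz * t
                    + index * math.sin(2 * math.pi * modulator_hz * t))


def gesture_to_fm(pressure, position):
    """Hypothetical mapping of two normalized gesture values (0..1) to FM inputs."""
    ratios = [1.0, 0.5, 1.0 / 3.0, 0.25]   # assumed c:m choices 1:1, 1:2, 1:3, 1:4
    cm_ratio = ratios[min(int(position * len(ratios)), len(ratios) - 1)]
    index = 5.0 * pressure                  # assumed range for the modulation index
    return cm_ratio, index


cm_ratio, index = gesture_to_fm(pressure=0.4, position=0.6)
samples = [fm_sample(n / 44100, 440.0, cm_ratio, index) for n in range(64)]
print(cm_ratio, index, max(samples))
```

Here a continuous hand position is quantized into a handful of discrete ratios, a design decision that already shapes how the instrument feels to play.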

For the case of physical models, the available variables are usually

the input parameters of an instrument, such as blow pressure, bow velocity,

etc. In a sense, the mapping of gestures to the synthesis inputs is more

evident, since the relation of these inputs to the algorithm already

encompasses

the multiple dependencies based on the physics of the particular

instrument.

Systematic Study of Mapping

The systematic study of mapping is an area that is still underdeveloped.

Only a few works have been proposed that analyze the influence of mapping

on digital musical instrument performance or suggested ways to define

mappings

to relate controller variables to synthesis inputs. Examples of works

include:

[9], [43],

[27], [106],

[19], [54],

[76], [59],

[101], [38],

[31], [39],

[53], and [18].

A detailed review of the existing literature on mapping was presented

in [14], and in [96].

Discussions on the role of mapping in computer music have also been carried out in the Working Group on Interactive Systems and Instrument Design in Music, at ICMA and EMF, where a complete bibliography on the subject is available, as well as suggestions on basic readings and links to existing research.

Mapping Strategies

Although simple one-to-one or direct mappings are by far

the most commonly used, other mapping strategies can be used.

For instance, we have shown that for the same gestural controller and

synthesis algorithm, the choice of mapping strategy may be the determinant

factor concerning the expressivity of the instrument [76].

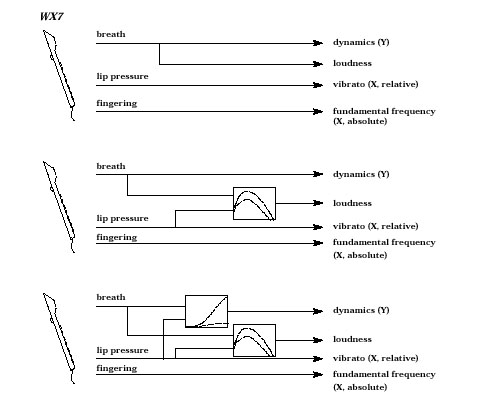

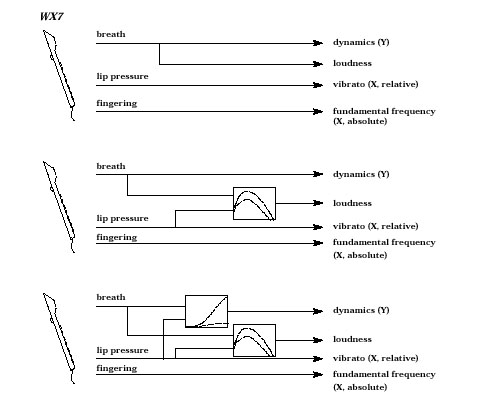

Figure 4: Examples of different mapping strategies for the

simulation

of instrumental performance [76].

The main idea was to challenge the main directions in digital musical

instrument design, i.e. input device design and research on different

synthesis

algorithms, and focus on the importance of different mappings using

off-the-shelf

controllers and standard synthesis algorithms.

Different mapping strategies were applied to the simulation of

traditional

acoustic single reed instruments using the controller, based on the actual

functioning of the single reed. Three basic strategies were suggested:

one-to-one,

one-to-many

and many-to-one, and were used in order to propose different

mappings,

from a simple one-to-one to complex mappings simulating the physical

behavior

of the clarinet's reed. This is shown in figure 4.

We could show, from the experience of single-reed instrument performers who tried the system, that the use of different mappings did influence the expressivity obtained during playing, without any modification to either the input device or the synthesis algorithm.
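
The three basic strategies can be sketched as follows. This is a hypothetical illustration, not the mappings used in [76]: the controller outputs (`breath`, `lip`, `note`), the synthesis inputs (`loudness`, `brightness`, `pitch`) and the coupling coefficient are all assumptions made for the example.

```python
def one_to_one(breath, lip, note):
    """Each controller output drives exactly one synthesis input."""
    return {"loudness": breath, "brightness": lip, "pitch": float(note)}

def one_to_many(breath, lip, note):
    """Divergent mapping: breath drives both loudness and brightness.

    Lip pressure is deliberately ignored here to keep the example small.
    """
    return {"loudness": breath, "brightness": breath, "pitch": float(note)}

def many_to_one(breath, lip, note):
    """Convergent mapping: loudness depends on breath and lip together.

    Assumption loosely inspired by a reed: more lip pressure reduces the
    loudness obtained for a given breath pressure.
    """
    loudness = breath * (1.0 - 0.5 * lip)
    return {"loudness": loudness, "brightness": lip, "pitch": float(note)}

gesture = (0.8, 0.5, 60)   # breath, lip pressure, note number
for mapping in (one_to_one, one_to_many, many_to_one):
    print(mapping.__name__, mapping(*gesture))
```

The controller data and the synthesis inputs are identical in all three cases; only the function relating them changes.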

Mapping for General Musical Performance

The example above, applying different mapping strategies to the simulation of instrumental performance, was derived from the actual physical behavior of the acoustic instrument. But in the case of an alternate digital musical instrument, the possible mapping strategies to be applied are far from obvious, since no model of the mapping strategies to be used is available.

Even so, it can be demonstrated that complex mappings may influence user

performance for the manipulation of general input devices in a musical

context.

An interesting work by A. Hunt and R. Kirk [38]

[37] [39]

presented a study on the influence over time of the choice of mapping

strategy

on subject performance in real-time musical control tasks. User performance

was measured over a period of several weeks and showed that complex mapping

strategies used with the multi-parametric instrument allowed better

performance

than simpler mappings for complex tasks (various parameters changing

simultaneously)

and also that performance with complex mapping strategies improved over

time.

A Model of Mapping as two Independent Layers

Mapping can be implemented as a single layer [9]

[19]. In this case, a change of either

the gestural controller or synthesis algorithm would mean the definition

of a different mapping.

One proposition to overcome this situation is the definition of mapping

as two independent layers: a mapping of control variables to

intermediate

parameters and a mapping of intermediate parameters to synthesis

variables [101].

This means that the use of different gestural controllers would

necessitate

the use of different mappings in the first layer, but the second layer,

between intermediate parameters and synthesis parameters, would remain

unchanged. Conversely, changing the synthesis method involves the

adaptation

of the second layer, considering that the same abstract parameters can

be used, but does not interfere with the first layer, therefore being

transparent

to the performer.

The definition of those intermediate parameters or an intermediate

abstract

parameter layer can be based on perceptual variables such as timbre,

loudness

and pitch, but can be based on other perceptual characteristics of sounds

[103] [52]

[31] or have no relationship to

perception,

being then arbitrarily chosen by the composer or performer [101].
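
A minimal sketch of the two-layer idea is given below, assuming perceptual intermediate parameters (pitch, loudness, brightness). All function names, the choice of intermediate parameters and the numeric constants are hypothetical and serve only to illustrate the structure; they are not the implementation described in [101].

```python
def controller_to_abstract(breath, lip, note):
    """Layer 1 (controller-specific): gesture variables -> abstract parameters."""
    return {
        "pitch_hz": 440.0 * 2.0 ** ((note - 69) / 12.0),  # MIDI-style note number
        "loudness": breath,
        "brightness": lip,
    }

def abstract_to_additive(p, n_partials=4):
    """Layer 2, variant A: abstract parameters -> (frequency, amplitude) partials."""
    rolloff = 2.0 - p["brightness"]   # assumed: brighter sound = slower decay
    return [(p["pitch_hz"] * k, p["loudness"] / k ** rolloff)
            for k in range(1, n_partials + 1)]

def abstract_to_fm(p):
    """Layer 2, variant B: the same abstract parameters -> FM inputs."""
    return {"carrier_hz": p["pitch_hz"], "c": 1, "m": 1,
            "index": 5.0 * p["brightness"], "amplitude": p["loudness"]}

def make_instrument(layer1, layer2):
    """Compose the two layers into a single gesture-to-synthesis function."""
    def play(*gesture):
        return layer2(layer1(*gesture))
    return play

additive_instrument = make_instrument(controller_to_abstract, abstract_to_additive)
fm_instrument = make_instrument(controller_to_abstract, abstract_to_fm)
```

Swapping `abstract_to_additive` for `abstract_to_fm` changes the synthesis method while `controller_to_abstract` stays untouched; a different controller would, conversely, require only a new first layer.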

Developments in Sound Synthesis Methods

At the other extreme of current trends regarding digital musical instruments, various developments in sound synthesis methods have been proposed, among them methods for both physical and signal models.

Physical models are especially useful for a realistic simulation of a given acoustic instrument, and today models of various instruments exist [82] and are commercially available.

Disadvantages of physical models include the lack of analysis methods, difficulties regarding the continuous morphing between different models and, most of all, the complexity of real-time performance using these models, which corresponds to the difficulties encountered with the real acoustic instrument.

Signal models, specifically additive synthesis [74],

present the advantage of having well-developed analysis tools that allow

the extraction of parameters corresponding to a given sound. Therefore,

the morphing of parameters from different sounds can lead to continuous

transformations between different instruments. Although not necessarily

reproducing the full behavior of the original instrument, the flexibility

allowed by signal models may be interesting for the prototyping of control

strategies since the mapping is left to the instrument designer.
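
As a rough illustration of morphing in the additive case, the sketch below linearly interpolates the partial frequencies and amplitudes of two analyzed sounds. It assumes that both sounds are described by the same number of partials, already matched in order; the function name and the example data are hypothetical, and practical systems typically use more elaborate interpolation schemes.

```python
def morph_partials(sound_a, sound_b, alpha):
    """Interpolate two lists of (frequency_hz, amplitude) partials.

    alpha = 0.0 returns sound_a, alpha = 1.0 returns sound_b.
    """
    if len(sound_a) != len(sound_b):
        raise ValueError("both sounds must have the same number of partials")
    return [((1.0 - alpha) * fa + alpha * fb,
             (1.0 - alpha) * aa + alpha * ab)
            for (fa, aa), (fb, ab) in zip(sound_a, sound_b)]

# Invented example data: two three-partial spectra.
sound_a = [(100.0, 1.0), (200.0, 0.5), (300.0, 0.25)]
sound_b = [(200.0, 0.0), (400.0, 0.5), (600.0, 0.75)]
halfway = morph_partials(sound_a, sound_b, 0.5)
```

The interpolation factor `alpha` is itself a synthesis input, so a single gesture variable could be mapped to it to control a continuous transformation between the two sounds.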

Conclusions

This paper has critically commented on various topics related to real-time,

gesturally controlled computer-generated sound.

I have discussed the specificities of the interaction between a human

and a computer in various musical contexts. These contexts represent

different

interaction metaphors for music/sound control and should not be underestimated

since they define the level of interaction with the computer.

I have then focused on the specific case of real-time gestural control

of sound synthesis, and presented and discussed the various constituent

parts of a digital musical instrument.

I have claimed that a balanced analysis of these constituent parts is

an essential step towards the design of new instruments, although current

developments often tend to focus either on the design of new gestural

controllers or on the proposition of different synthesis algorithms.

Suggestions of Future Research Directions

Finally, I present suggestions of topics for future research that I believe can eventually lead to new directions in the design and performance of digital musical instruments.

-

Concerning gestural acquisition, the use of indirect acquisition through

the analysis of the sound produced by acoustic instruments may help the

design of new gestural controllers. Although various works have been

proposed

in this direction, a general overview showing the current state of the

research in this area was missing and was presented in [96].

It can serve as a basis for future developments in the field.

-

The development of evaluation techniques for multiparametric expert user

tasks is a difficult problem that may improve our knowledge of the actual

motor behavior of performers in these circumstances. The definition of

tasks taking into account different musical contexts may also help the

comparison of existing devices and suggest design methodologies for the

development of new digital musical instruments.

-

The study of mapping strategies is, in my opinion, quite underdeveloped.

We have been pioneers in showing the isolated effect of mapping on digital

musical instrumental design and another important work recently carried

out at the University of York has pushed further our knowledge about

general

multiparametric control situations and the influence of mapping on

performance.

From these studies there seems to exist a huge unexplored potential in

the definition of mapping strategies for different contexts.

Acknowledgements

This work is a result of a PhD thesis recently completed at IRCAM - Centre

Pompidou, in Paris, thanks to funding through a doctoral grant from CNPq,

Brazil.

Thanks to my advisors Philippe Depalle and Xavier Rodet, and to Marc

Battier, Claude Cadoz, Shlomo Dubnov, Andy Hunt, Fabrice Isart, Ross Kirk,

Nicola Orio, Joseph Butch Rovan, Norbert Schnell,

Marie-Hélène

Serra, Hugues Vinet, and Jean-Philippe Viollet, for various discussions,

ideas, and comments throughout my stay at IRCAM.

This paper contains material already published by the author and

collaborators.

These are duly referenced throughout the text.

References

-

1

-

N. Bailey, A. Purvis, I. W. Bowler, and P. D. Manning. Applications

of the Phase Vocoder in the Control of Real-Time Electronic Musical

Instruments.

Interface,

22:259-275, 1993.

-

2

-

M. Battier.

Les Musiques Electroacoustiques et l'environnement

informatique. PhD thesis, University of Paris X - Nanterre, 1981.

-

3

-

R. Boie, M. V. Mathews, and A. Schloss. The Radio Drum as a

Synthesizer

Controller. In Proc. of the 1989 International Computer Music

Conference.

San Francisco, Calif.: International Computer Music Association, pages

42-45, 1989.

-

4

-

B. Bongers. The use of Active Tactile and Force Feedback in Timbre

Controlling Electronic Instruments. In Proc. of the 1994 International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 171-174, 1994.

-

5

-

B. Bongers. An Interview with Sensorband.

Computer Music J.,

22(1):13-24, 1998.

-

6

-

B. Bongers. Tactual Display of Sound Properties in Electronic Musical

Instruments. Displays, 18:129-133, 1998.

-

7

-

B. Bongers.

Trends in Gestural Control of Music, chapter Physical

Interfaces in the Electronic Arts. Interaction Theory and Interfacing

Techniques

for Real-time Performance. Ircam - Centre Pompidou, 2000.

-

8

-

G. Borin, G. dePoli, and A. Sarti.

Musical Signal Processing,

chapter Musical Signal Synthesis, pages 5-30. Swets and Zeitlinger,

1997.

-

9

-

I. Bowler, A. Purvis, P. Manning, and N. Bailey. On Mapping N

Articulation

onto M Synthesiser-Control Parameters. In Proc. of the 1990

International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 181-184, 1990.

-

10

-

W. Buxton.

Trends in Gestural Control of Music, chapter Round

Table. Ircam - Centre Pompidou, 2000.

-

11

-

C. Cadoz. Instrumental Gesture and Musical Composition. In Proc.

of the 1988 International Computer Music Conference. San Francisco, Calif.:

International Computer Music Association, pages 1-12, 1988.

-

12

-

C. Cadoz, L. Lisowski, and J. L. Florens. A Modular Feedback Keyboard

Design. Computer Music J., 14(2):47-56, 1990.

-

13

-

C. Cadoz and C. Ramstein. Capture, Representation and

"Composition"

of the Instrumental Gesture. In Proc. of the 1990 International Computer

Music Conference. San Francisco, Calif.: International Computer Music

Association,

pages 53-56, 1990.

-

14

-

C. Cadoz and M. M. Wanderley.

Trends in Gestural Control of Music,

chapter Gesture-Music. Ircam - Centre Pompidou, 2000.

-

15

-

A. Camurri. Interactive Dance/Music Systems. In Proc. of the 1995

International Computer Music Conference. San Francisco, Calif.:

International

Computer Music Association, pages 245-252, 1995.

-

16

-

S. Card, J. Mackinlay, and G. Robertson. A Morphological Analysis

of the Design Space of Input Devices.

ACM Transactions on Information

Systems, 9(2):99-122, 1991.

-

17

-

I. Choi. Cognitive Engineering of Gestural Primitives for Multi-Modal

Interaction in a Virtual Environment. In Proc. IEEE International

Conference

on Systems, Man and Cybernetics (SMC'98), pages 1101-1106, 1998.

-

18

-

I. Choi.

Trends in Gestural Control of Music, chapter Gestural

Primitives and the Context for Computational Processing in an Interactive

Performance System. Ircam - Centre Pompidou, 2000.

-

19

-

I. Choi, R. Bargar, and C. Goudeseune. A Manifold interface for a

high dimensional control space. In Proc. of the 1995 International

Computer

Music Conference. San Francisco, Calif.: International Computer Music

Association,

pages 385-392, 1995.

-

20

-

P. Cook. Principles for Designing Computer Music Controllers. In

Proc.

of the NIME Workshop, CHI 2001, 2001.

-

21

-

P. Cook, D. Morrill, and J. O. Smith. A MIDI Control and Performance

System for Brass Instruments. In Proc. of the 1993 International

Computer

Music Conference. San Francisco, Calif.: International Computer Music

Association,

pages 130-133, 1993.

-

22

-

A. de Ritis. Senses of Interaction: What does Interactivity in Music

mean anyway? Focus on the Computer Game Industry. In Proc. of the

Society

for Artificial Intelligence and the Simulation of Behavior Conference,

London, 2001.

-

23

-

F. Delalande. La gestique de Gould. In Glenn Gould Pluriel,

pages 85-111. Louise Courteau, éditrice, inc., 1988.

-

24

-

P. Depalle, S. Tassart, and M. M. Wanderley. Instruments Virtuels.

Résonance,

(12):5-8, Sept. 1997.

-

25

-

A. J. Dix, J. Finlay, G. Abowd, and R. Beale.

Human-Computer Interaction.

Prentice Hall, second edition, 1998.

-

26

-

E. B. Egozy. Deriving Musical Control Features from a Real-Time

Timbre

Analysis of the Clarinet. Master's thesis, Massachusetts Institute of

Technology,

1995.

-

27

-

S. Fels.

Glove Talk II: Mapping Hand Gestures to Speech Using

Neural Networks. PhD thesis, University of Toronto, 1994.

-

28

-

E. Flety.

Trends in Gestural Control of Music, chapter 3D

Gesture Acquisition Using Ultrasonic Sensors. Ircam - Centre Pompidou,

2000.

-

29

-

A. Freed, R. Avizienis, T. Suzuki, and D. Wessel. Scalable

Connectivity

Processor for Computer Music Performance Systems. In Proc. of the 2000

International Computer Music Conference. San Francisco, Calif.:

International

Computer Music Association, 2000.

-

30

-

A. Freed and D. Wessel. Communication of Musical Gesture using the

AES/EBU Digital Audio Standard. In Proc. of the 1998 International

Computer

Music Conference. San Francisco, Calif.: International Computer Music

Association,

pages 220-223, 1998.

-

31

-

G. Garnett and C. Goudeseune. Performance Factors in Control of

High-Dimensional

Spaces. In Proc. of the 1999 International Computer Music Conference.

San Francisco, Calif.: International Computer Music Association, pages

268-271, 1999.

-

32

-

P. H. Garrett.

Advanced Instrumentation and Computer I/O Design.

Real-time System Computer Interface Engineering. IEEE Press, 1994.

-

33

-

N. Gershenfeld and J. Paradiso. Musical Applications of Electric

Field Sensing. Computer Music J., 21(2):69-89, 1997.

-

34

-

S. Gibet.

Codage, Représentation et Traitement du Geste

Instrumental. PhD thesis, Institut National Polytechnique de Grenoble,

1987.

-

35

-

S. Goto. The Aesthetics and Technological Aspects of Virtual Musical

Instruments: The Case of the SuperPolm MIDI Violin.

Leonardo Music Journal,

9:115-120, 1999.

-

36

-

V. Hayward and O. R. Astley.

Robotics Research: The 7th International

Symposium, chapter Performance Measures for Haptic Interfaces, pages

195-207. Springer Verlag, 1996.

-

37

-

A. Hunt.

Radical User Interfaces for Real-time Musical Control.

PhD thesis, University of York, 1999.

-

38

-

A. Hunt and R. Kirk. Radical User Interfaces for Real-Time Control.

In Euromicro 99 Conference, Milan, 1999.

-

39

-

A. Hunt and R. Kirk.

Trends in Gestural Control of Music,

chapter Mapping Strategies for Musical Performance. Ircam - Centre

Pompidou,

2000.

-

40

-

A. Hunt, M. M. Wanderley, and R. Kirk. Towards a Model of Mapping

Strategies for Instrumental Performance. In Proc. of the 2000

International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 209-212, 2000.

-

41

-

J. Impett. A Meta-Trumpet(er). In Proc. of the 1994 International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 147-150, 1994.

-

42

-

International MIDI Association. MIDI Musical Instrument Digital

Interface

Specification 1.0. North Hollywood: International MIDI Association,

1983.

-

43

-

M. Lee and D. Wessel. Connectionist Models for Real-Time Control

of Synthesis and Compositional Algorithms. In Proc. of the 1992

International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 277-280, 1992.

-

44

-

C. Lippe. A Composition for Clarinet and Real-Time Signal Processing:

Using Max on the IRCAM Signal Processing Workstation. In 10th Italian

Colloquium on Computer Music, pages 428-432, 1993.

-

45

-

H. Lusted and B. Knapp. Controlling Computer with Neural Signals.

Scientific

American, pages 58-63, 1996.

-

46

-

T. Machover. Hyperinstruments - A Progress Report 1987 - 1991.

Technical

report, Massachusetts Institute of Technology, 1992.

-

47

-

I. S. MacKenzie and W. Buxton. Extending Fitts' Law to Two

Dimensional

Tasks. In Proc. Conf. on Human Factors in Computing Systems

(CHI'92),

pages 219-226, 1992.

-

48

-

T. Marrin and J. Paradiso. The Digital Baton: A Versatile Performance

Instrument. In Proc. of the 1997 International Computer Music

Conference.

San Francisco, Calif.: International Computer Music Association, pages

313-316, 1997.

-

49

-

T. Marrin-Nakra.

Trends in Gestural Control of Music, chapter

Searching for Meaning in Gestural Data: Interpretive Feature Extraction

and Signal Processing for Affective and Expressive Content. Ircam - Centre

Pompidou, 2000.

-

50

-

M. V. Mathews and G. Bennett. Real-time synthesizer control.

Technical

report, IRCAM, 1978. Rapport 5/78.

-

51

-

M. V. Mathews and F. R. Moore. GROOVE - A Program to Compose, Store

and Edit Functions of Time.

Communications of the ACM, 13(12):715-721,

December 1970.

-

52

-

E. Métois.

Musical Sound Information - Musical Gestures

and Embedding Systems. PhD thesis, Massachusetts Institute of

Technology,

1996.

-

53

-

P. Modler.

Trends in Gestural Control of Music, chapter Neural

Networks for Mapping Gestures to Sound Synthesis. Ircam - Centre Pompidou,

2000.

-

54

-

P. Modler and I. Zannos. Emotional Aspects of Gesture Recognition

by a Neural Network, using Dedicated Input Devices. In Proc. KANSEI

- The Technology of Emotion Workshop, 1997.

-

55

-

F. R. Moore. The Disfunctions of MIDI. In Proc. of the 1987

International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 256-262, 1987.

-

56

-

A. Mulder. Virtual Musical Instruments: Accessing the Sound Synthesis

Universe as a Performer. In Proceedings of the First Brazilian Symposium

on Computer Music, 1994.

-

57

-

A. Mulder.

Design of Gestural Constraints Using Virtual Musical

Instruments. PhD thesis, School of Kinesiology, Simon Fraser

University,

Canada, 1998.

-

58

-

A. Mulder.

Trends in Gestural Control of Music, chapter Towards

a Choice of Gestural Constraints for Instrumental Performers. Ircam -

Centre

Pompidou, 2000.

-

59

-

A. Mulder, S. Fels, and K. Mase. Empty-handed Gesture Analysis in

Max/FTS. In Proc. KANSEI - The Technology of Emotion Workshop,

1997.

-

60

-

N. Orio. A Gesture Interface Controlled by the Oral Cavity. In

Proc.

of the 1997 International Computer Music Conference. San Francisco, Calif.:

International Computer Music Association, pages 141-144, 1997.

-

61

-

N. Orio. The Timbre Space of the Classical Guitar and its

Relationship

with the Plucking Techniques. In Proc. of the 1999 International

Computer

Music Conference. San Francisco, Calif.: International Computer Music

Association,

pages 391-394, 1999.

-

62

-

N. Orio, N. Schnell, and M. M. Wanderley. Input Devices for Musical

Expression: Borrowing Tools from HCI. In Workshop on New Interfaces

for Musical Expression - ACM CHI01, 2001.

-

63

-

J. Paradiso. New Ways to Play: Electronic Music Interfaces.

IEEE

Spectrum, 34(12):18-30, 1997.

-

64

-

G. Peeters and X. Rodet. Non-Stationary Analysis/Synthesis using

Spectrum Peak Shape Distortion, Phase and Reassignement. In Proceedings

of the International Congress on Signal Processing Applications Technology

- ICSPAT, 1999.

-

65

-

R. Picard.

Affective Computing. MIT Press, 1997.

-

66

-

P. Pierrot and A. Terrier. Le violon midi. Technical report, IRCAM,

1997.

-

67

-

D. Pousset. La Flute-MIDI, l'histoire et quelques applications.

Mémoire

de Maîtrise, 1992. Université Paris-Sorbonne.

-

68

-

M. Puckette and C. Lippe. Getting the Acoustic Parameters from a

Live Performance. In 3rd International Conference for Music Perception

and Cognition, pages 328-333, 1994.

-

69

-

C. Ramstein.

Analyse, Représentation et Traitement du Geste

Instrumental. PhD thesis, Institut National Polytechnique de Grenoble,

December 1991.

-

70

-

J. C. Risset. Évolution des outils de création sonore.

In Interfaces Homme-Machine et Création Musicale, pages

17-36.

Hermes Science Publishing, 1999.

-

71

-

J. C. Risset and S. Van Duyne. Real-Time Performance Interaction

with a Computer-Controlled Acoustic Piano.

Computer Music J., 20(1):62-75,

1996.

-

72

-

C. Roads.

Computer Music Tutorial. MIT Press, 1996.

-

73

-

C. Roads.

Computer Music Tutorial, chapter Musical Input Devices,

pages 617-658. The MIT Press, 1996.

-

74

-

X. Rodet and P. Depalle. A New Additive Synthesis Method using

Inverse

Fourier Transform and Spectral Envelopes. In Proc. of the 1992

International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 410-411, 1992.

-

75

-

J. P. Roll.

Traité de Psychologie Expérimentale,

chapter Sensibilités cutanées et musculaires. Presses

Universitaires

de France, 1994.

-

76

-

J. Rovan, M. M. Wanderley, S. Dubnov, and P. Depalle. Instrumental

Gestural Mapping Strategies as Expressivity Determinants in Computer Music

Performance. In Proceedings of the Kansei - The Technology of Emotion

Workshop, Genova - Italy, Oct. 1997.

-

77

-

J. Ryan. Some Remarks on Musical Instrument Design at STEIM.

Contemporary

Music Review, 6(1):3-17, 1991.

-

78

-

J. Ryan. Effort and Expression. In Proc. of the 1992 International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 414-416, 1992.

-

79

-

S. Sapir. Interactive Digital Audio Environments: Gesture as a

Musical

Parameter. In Proc. COST-G6 Conference on Digital Audio Effects

(DAFx'00),

pages 25-30, 2000.

-

80

-

S. Serafin, R. Dudas, M. M. Wanderley, and X. Rodet. Gestural Control

of a Real-Time Physical Model of a Bowed String Instrument. In Proc.

of the 1999 International Computer Music Conference. San Francisco, Calif.:

International Computer Music Association, pages 375-378, 1999.

-

81

-

T. Sheridan. Musings on Music Making and Listening: Supervisory

Control

and Virtual Reality. In Human Supervision and Control in Engineering

and Music Workshop, September 2001. Kassel, Germany.

-

82

-

J. O. Smith.

Musical Signal Processing, chapter Acoustic Modeling

using Digital Waveguides, pages 221-263. Swets & Zeitlinger, Lisse,

The Netherlands, 1997.

-

83

-

R. L. Smith.

The Electrical Engineering Handbook, chapter

Sensors. CRC Press, 1993.

-

84

-

M. Starkier and P. Prevot. Real-Time Gestural Control. In Proc.

of the 1986 International Computer Music Conference. San Francisco, Calif.:

International Computer Music Association, pages 423-426, 1986.

-

85

-

A. Tanaka. Musical Technical Issues in Using Interactive Instrument

Technology with Applications to the BioMuse. In Proc. of the 1993

International

Computer Music Conference. San Francisco, Calif.: International Computer

Music Association, pages 124-126, 1993.

-

86

-

A. Tanaka.

Trends in Gestural Control of Music, chapter Musical

Performance Practice on Sensor-based Instruments. Ircam - Centre Pompidou,

2000.

-

87

-

T. Ungvary and R. Vertegaal.

Trends in Gestural Control of Music,

chapter Cognition and Physicality in Musical Cyberinstruments. Ircam -

Centre Georges Pompidou, 2000.

-

88

-

V. Välimäki and T. Takala. Virtual Musical Instruments

- natural sound using physical models.

Organised Sound, 1(2):75-86,

1996.

-

89

-

C. Vergez.

Trompette et trompettiste: un système dynamique

non linéaire analysé, modélisé et simulé dans un

contexte

musical. PhD thesis, Ircam - Université Paris VI, 2000.

-

90

-

R. Vertegaal. An Evaluation of Input Devices for Timbre Space

Navigation.

Master's thesis, Department of Computing - University of Bradford,

1994.

-

91

-

R. Vertegaal and E. Bonis. ISEE: An Intuitive Sound Editing

Environment.

Computer

Music J., 18(2):12-29, 1994.

-

92

-

R. Vertegaal and B. Eaglestone. Comparison of Input Devices in an

ISEE Direct Timbre Manipulation Task.

Interacting with Computers,

8(1):13-30, 1996.

-

93

-

R. Vertegaal, T. Ungvary, and M. Kieslinger. Towards a Musician's

Cockpit: Transducer, Feedback and Musical Function. In Proc. of the

1996 International Computer Music Conference. San Francisco, Calif.:

International

Computer Music Association, pages 308-311, 1996.

-

94

-

M. Waisvisz.

Trends in Gestural Control of Music, chapter

Round Table. Ircam - Centre Pompidou, 2000.

-

95

-

M. Waisvisz. The Hands, a Set of Remote MIDI-Controllers. In Proc.

of the 1985 International Computer Music Conference. San Francisco, Calif.:

International Computer Music Association, pages 313-318, 1985.

-

96

-

M. M. Wanderley.

Performer-Instrument Interaction: Applications

to Gestural Control of Sound Synthesis. PhD thesis, Université

Pierre et Marie Curie - Paris VI, 2001.

-

97

-

M. M. Wanderley and M. Battier, editors.

Trends in Gestural Control

of Music. Ircam - Centre Pompidou, 2000.

-

98

-

M. M. Wanderley and P. Depalle.

Interfaces Homme-Machine et Création

Musicale, chapter Contrôle Gestuel de la Synthèse Sonore,

pages 145-163. Hermes Science Publications, 1999.

-

99

-

M. M. Wanderley, P. Depalle, and O. Warusfel. Improving Instrumental

Sound Synthesis by Modeling the Effects of Performer Gesture. In Proc.

of the 1999 International Computer Music Conference. San Francisco, Calif.:

International Computer Music Association, pages 418-421, 1999.

-

100

-

M. M. Wanderley, N. Orio, and N. Schnell. Towards an Analysis of

Interaction in Sound Generating Systems. In Proc. of the International

Symposium on Electronic Arts - ISEA2000, Paris - France, 2000.

-

101

-

M. M. Wanderley, N. Schnell, and J. Rovan. Escher - Modeling and

Performing "Composed Instruments" in Real-time. In Proc. IEEE

International

Conference on Systems, Man and Cybernetics (SMC'98), 1998.

-

102

-

M. M. Wanderley, J. P. Viollet, F. Isart, and X. Rodet. On the Choice

of Transducer Technologies for Specific Musical Functions. In Proc.

of the 2000 International Computer Music Conference. San Francisco, Calif.:

International Computer Music Association, pages 244-247, 2000.

-

103

-

D. Wessel. Timbre Space as a Musical Control Structure.

Computer

Music Journal, 3(2):45-52, 1979.

-

104

-

D. Wessel and M. Wright. Problems and Prospects for Intimate Musical

Control of Computers. In New Interfaces for Musical Expression - NIME

Workshop, 2001.

-

105

-

A. Willier and C. Marque. Juggling Gesture Analysis for Music

Control.

In IV Gesture Workshop, 2001. London, England.

-

106

-

T. Winkler. Making Motion Musical: Gestural Mapping Strategies for

Interactive Computer Music. In Proc. of the 1995 International Computer

Music Conference. San Francisco, Calif.: International Computer Music

Association,

pages 261-264, 1995.

-

107

-

S. Ystad.

Sound Modeling using a Combination of Physical and Signal

Models. PhD thesis, Université Aix-Marseille II, 1998.

Notes

-

...Wanderley

-

Current address: Faculty of Music - McGill University, 555, Sherbrooke

Street West, Montreal, Quebec - H3A 1E3 Canada

-

...manipulation

-

With the exception of extreme conditions, such as 3-dimensional whole-body

acquisition in large spaces

-

...non-musical

-

For a survey on haptic devices, check the Haptics Community Web page at:

http://haptic.mech.mwu.edu/

-

...[12]

-

Even so, many users still use the traditional piano-like keyboard as the

main input device for musical interaction. This situation seems to be

equivalent

to the ubiquitous role played by the mouse and keyboard in traditional

human-computer interaction (HCI).

-

...interaction

-

For general information on human-computer interaction, the reader is

directed

to general textbooks, such as [25] or to

the ACM SIGCHI webpage at: http://www.acm.org/sigchi/

-

...effects

-

Several papers on the control of digital audio effects are available from

the DAFx site, at:

http://echo.gaps.ssr.upm.es/COSTG6/goals.php3

-

...contexts

-

Sometimes called metaphors for musical control [104].

-

...music

-

A detailed analysis of different interaction contexts has been proposed

in [100], taking into account two points

of view: system design (engineering) and semantical (human-computer

interaction).

-

...interaction

-

Due to space constraints, other important and interesting modalities of

human-computer interaction in music will not be studied here. An electronic

publication (CDROM), Trends in Gestural Control of Music, co-edited by the author and by M. Battier [97],

may present useful guidelines for the study of other modalities not

discussed

here.

-

...

-

The term digital musical instrument [2]

will be used instead of virtual musical instrument - VMI

[57] due to the various meanings of

VMI, such as for example in the software package Modalys, where

a software-defined instrument is called a virtual instrument, without

necessarily

the use of an input device. This is also the common usage of the term in

the field of physical modeling [88].

On the other hand, various authors consider a VMI as both the synthesis

algorithm and the input device [56]

[24] [35],

although in this case the digital musical instrument is eventually much

more real or tangible and less virtual.

-

...controller

-

The term gestural controller is used here meaning input device

for musical control.

-

...algorithm

-

Details of each module of figure 1 will

be considered in the rest of this document.

-

...[14]

-

I will use the term performer gesture throughout this document

meaning

both actions such as prehension and manipulation, and non-contact

movements.

-

...differences

-

I have developed an extended comparison of nine commercially available

models, including technical characteristics and general features, based

on information provided from manufacturers and/or owner

manuals/manufacturer's

web pages. This comparison is available from the Gesture Research in

Music home-page at http://www.ircam.fr/gesture

-

...controller

-

Called input device in traditional human-computer interaction.

-

...controller

-

According to A. Mulder, a virtual musical instrument (here called digital musical instrument) is ideally capable of capturing any gesture from the universe of all possible human movements and using it to produce any audible sound [56].

-

...instrument

-

This fact can be modified by the use of different mapping strategies, as

shown in [76].

-

...designs

-

A similar situation occurs in other areas, such as haptic devices [36].

-

...[58]

-

On the other hand, other authors claim that effort-demanding and

hard-to-play

instruments are the only ones that provide expressive possibilities to

a performer [77] [78]

[94].

-

...goals

-

A description of several input device designs is given in [7],

where Bongers reviews his work at STEIM,

the Institute of Sonology (Den Haag) and in the

Royal Academy of Arts in Amsterdam. Another good review of different

controllers

has been presented by J. Paradiso in [63].

-

...EMF

-

http://www.notam.uio.no/icma/interactivesystems/wg.html


