Morphing Musical Instrument Sounds Guided by High-Level Descriptors

Introduction

This page briefly introduces the model I developed during my PhD for Sound Morphing Guided by Descriptors. The model is intended to allow independent manipulation of perceptually relevant features of sounds, each captured by a descriptor derived from perceptual studies. To this end, we proposed a model for each perceptually salient timbral feature, along with a means to manipulate that feature guided by the values of its descriptor.

Sound morphing has been used in music compositions, in synthesizers, and even in psychoacoustic experiments, notably to study timbre spaces.

Harmonic Sinusoidal + Residual

The classical morphing technique uses the interpolation principle, which consists in interpolating the parameters of the model used to represent the sounds regardless of perceptual features. The basic idea behind the interpolation principle is that if we can represent different sounds by simply adjusting the parameters of a model, we should obtain a somewhat smooth transition between two (or more) sounds by interpolating between these parameters.
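As a minimal sketch of the interpolation principle, the snippet below linearly interpolates the partial frequencies and amplitudes of a single analysis frame. The function name `interpolate_parameters`, the morphing factor `alpha`, and the numeric values are hypothetical illustrations, not data or code from the model described on this page.

```python
import numpy as np

def interpolate_parameters(source, target, alpha):
    """Linear interpolation: alpha=0 returns source, alpha=1 returns target."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    return (1.0 - alpha) * source + alpha * target

# Hypothetical partial parameters for one frame (illustrative values only).
source_freqs = np.array([440.0, 880.0, 1320.0])   # Hz
target_freqs = np.array([220.0, 660.0, 1100.0])   # Hz
source_amps = np.array([1.0, 0.5, 0.25])
target_amps = np.array([0.8, 0.1, 0.6])

alpha = 0.5  # halfway between the two sounds
morph_freqs = interpolate_parameters(source_freqs, target_freqs, alpha)
morph_amps = interpolate_parameters(source_amps, target_amps, alpha)
```

Note that nothing in this scheme refers to perception: the intermediate parameters are halfway in the parameter space, which does not guarantee that the result sounds halfway between the two sounds.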

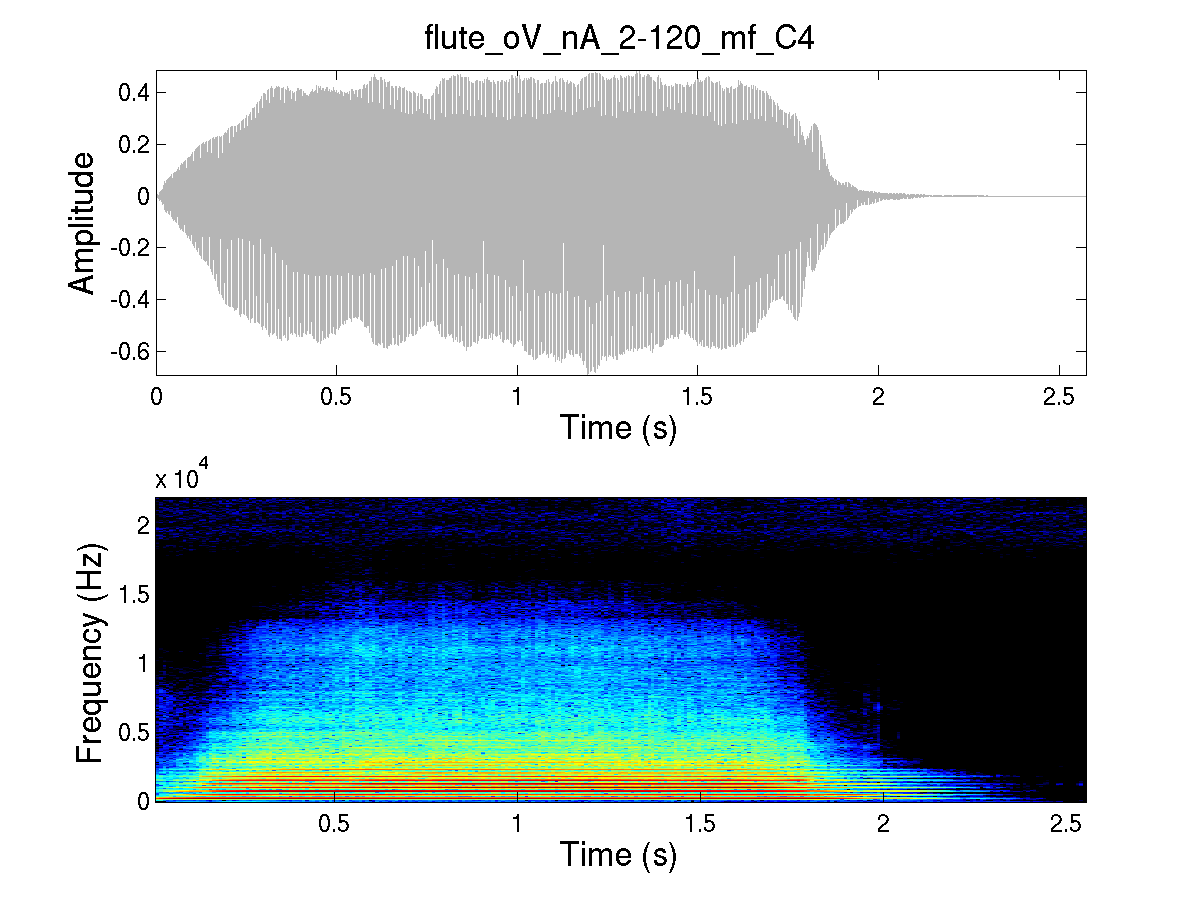

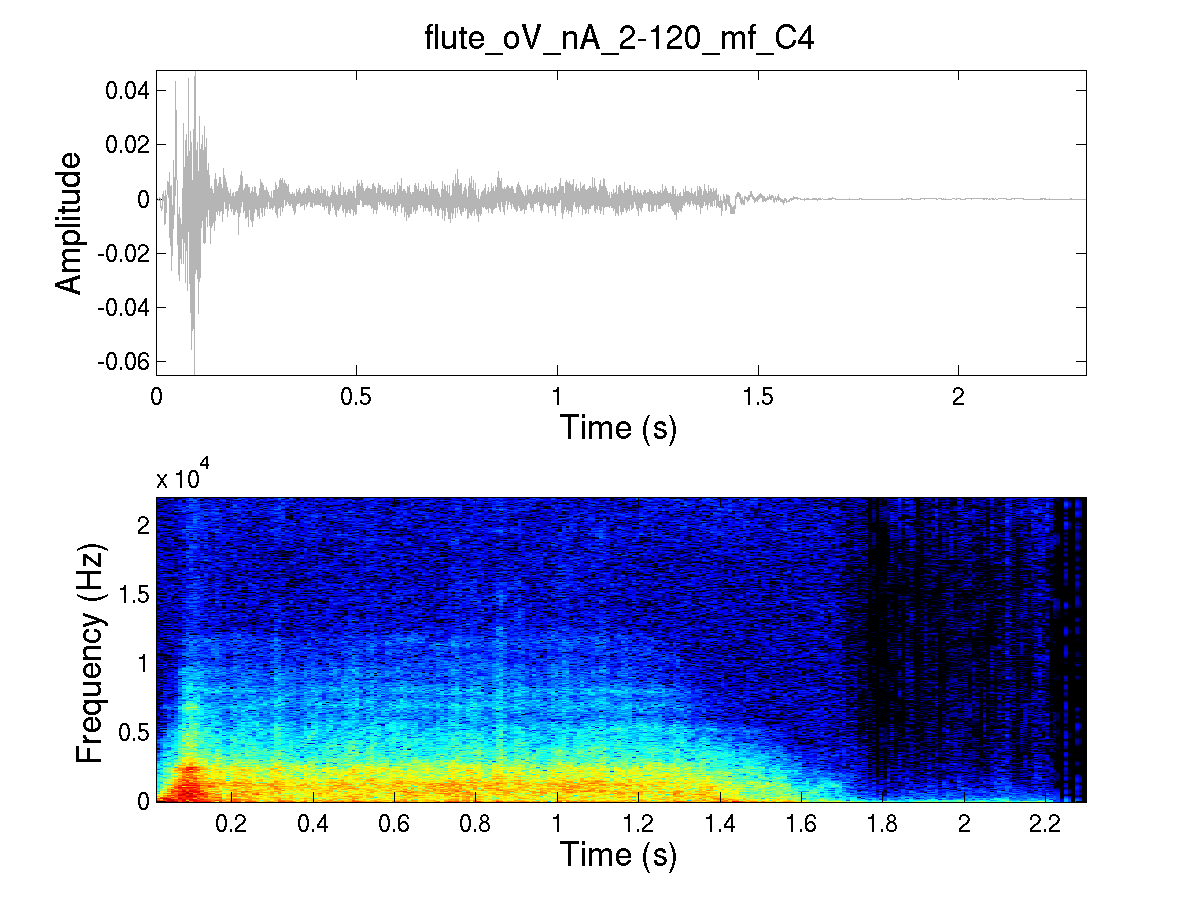

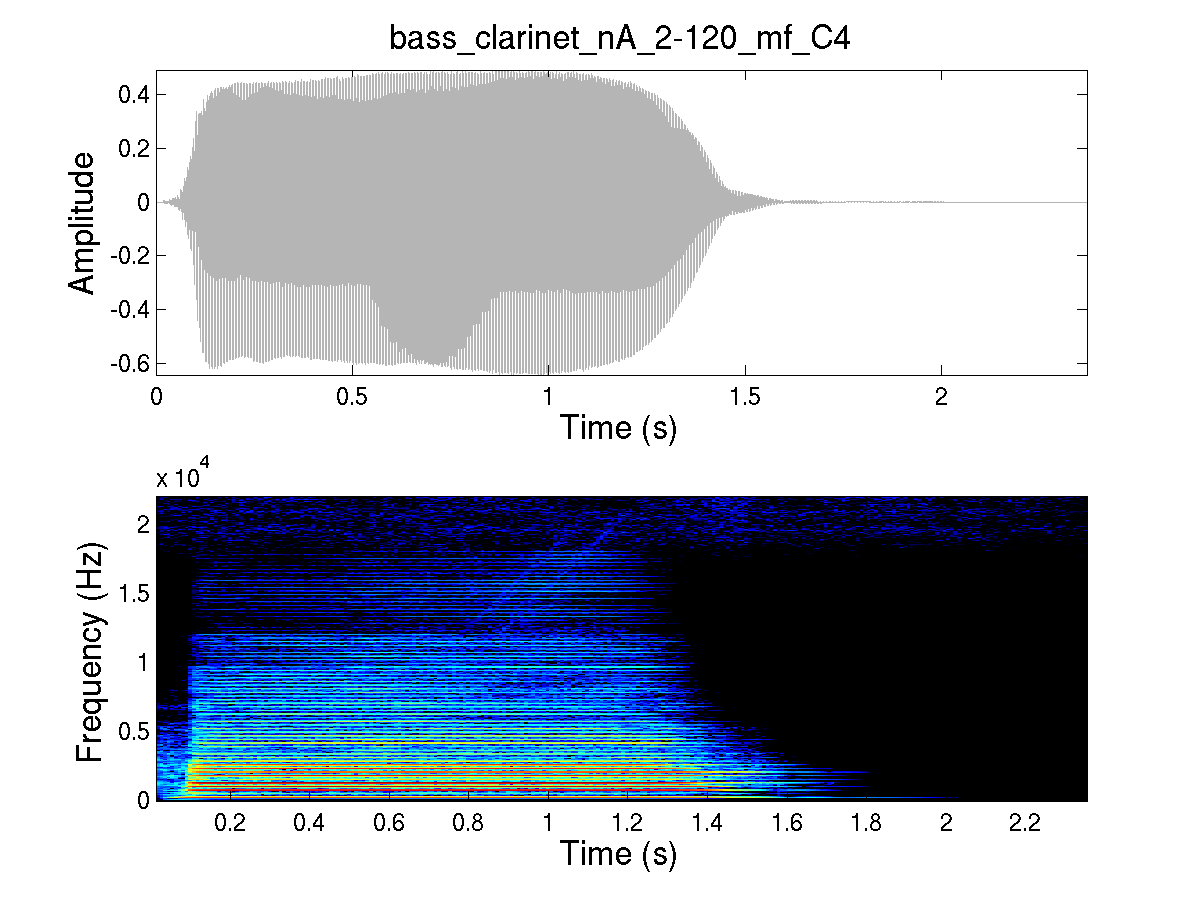

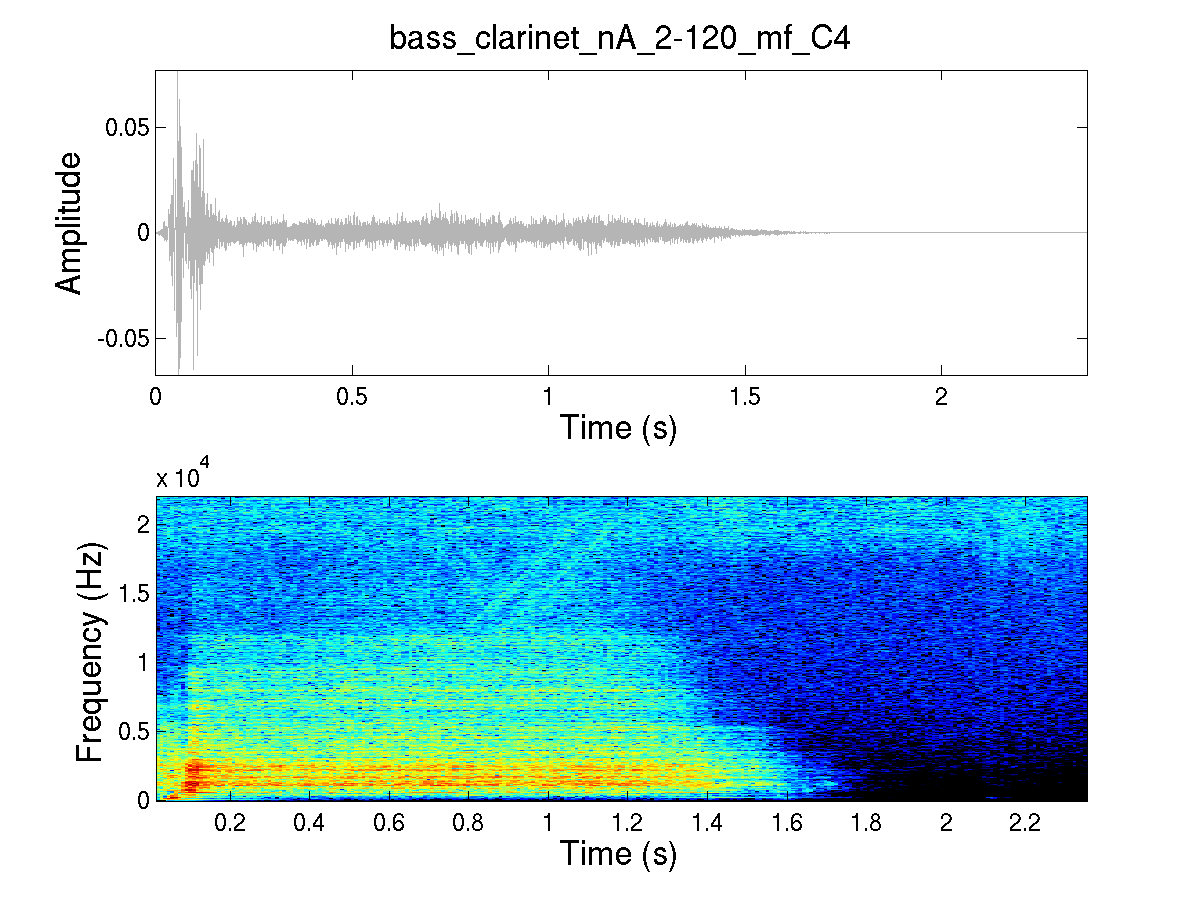

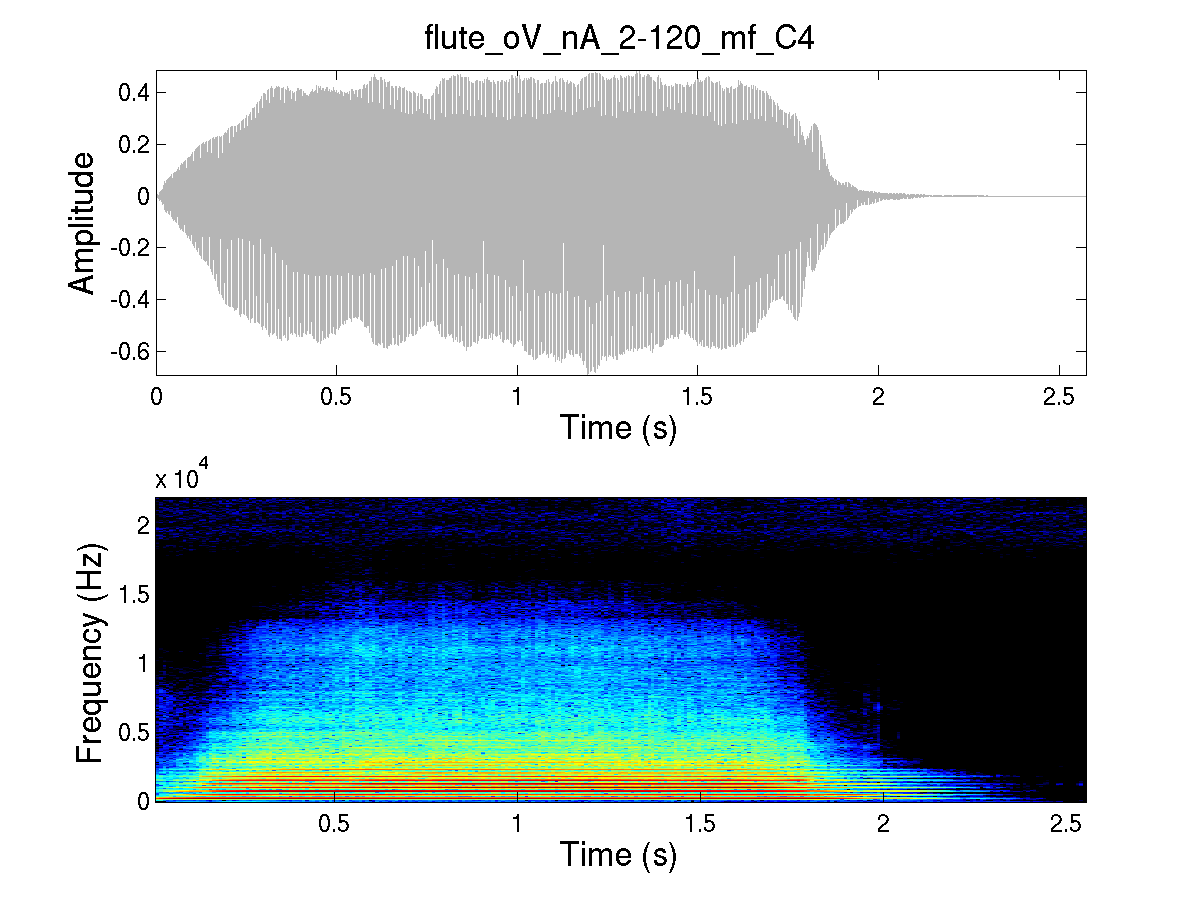

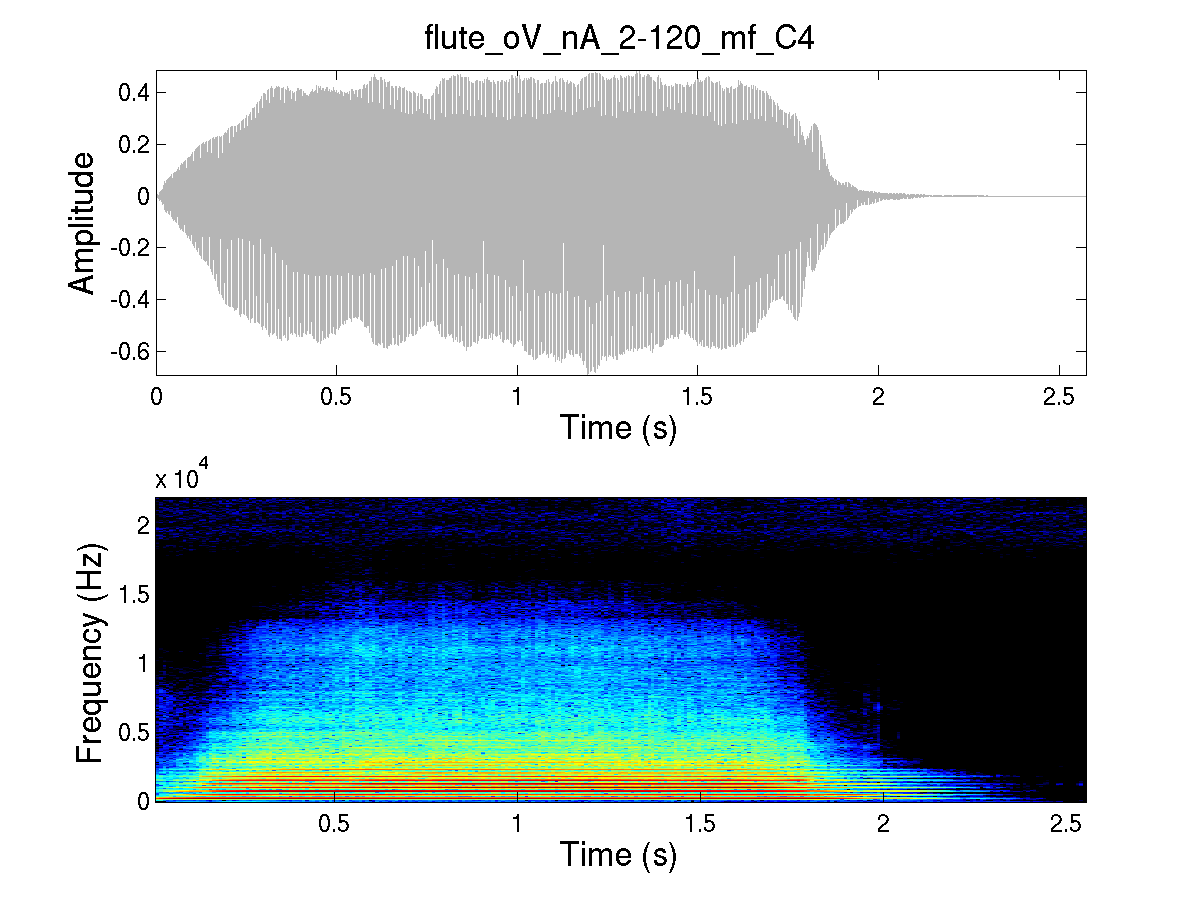

It is therefore important to understand the basic representation of musical instrument sounds used in our model. We use a Harmonic Sinusoidal plus Residual representation, exemplified below. We show the waveform and the spectrogram for each musical instrument sound, a Flute and a Bass Clarinet. The original sound is presented first, followed by the Harmonic Sinusoidal and Residual parts.

Flute

Notice that the original flute sound is very noisy (mostly due to breathing) and that this characteristic becomes very clear when we represent the harmonic sinusoidal and residual parts separately. The Flute sound also has a comparatively slow attack, even for a wind instrument. Compare it with the Bass Clarinet sound, for example.

Original | Harmonic Sinusoidal | Residual

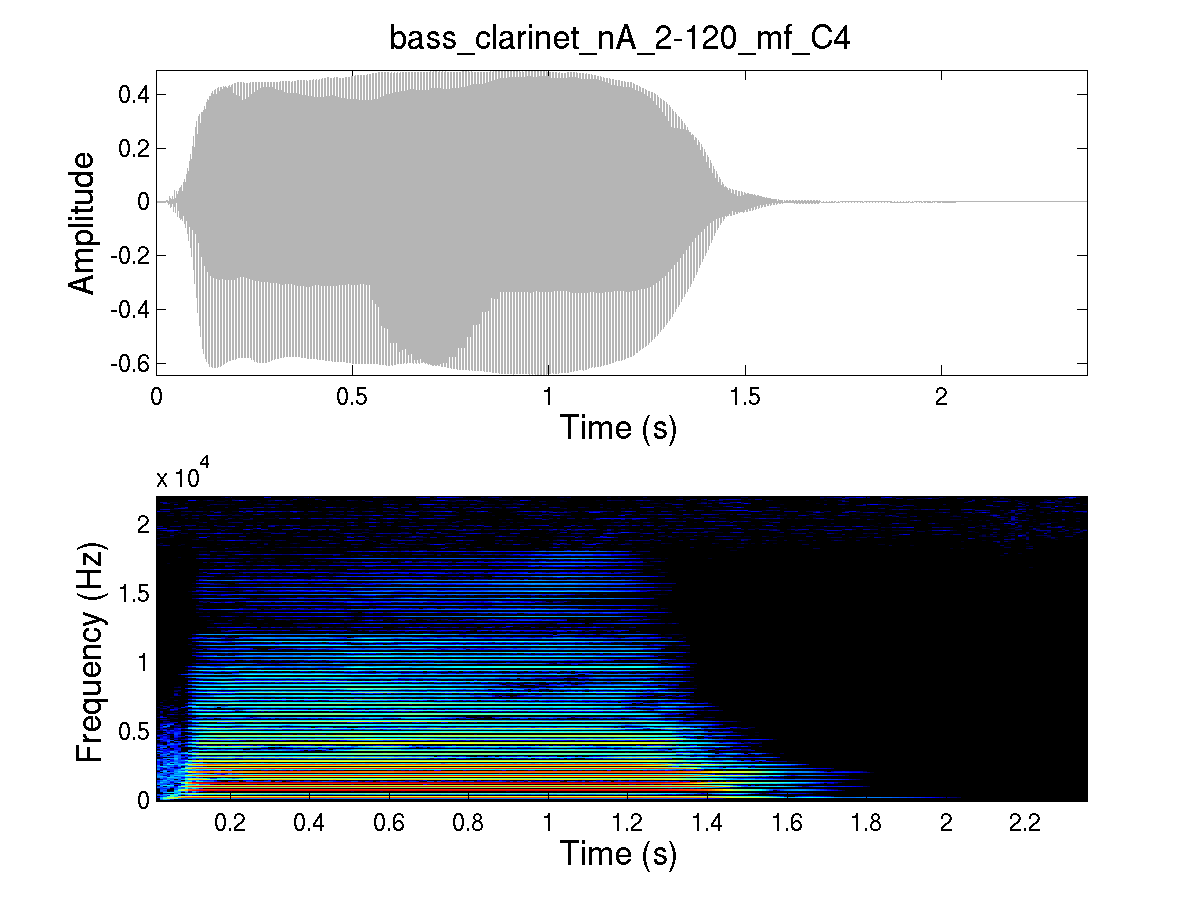

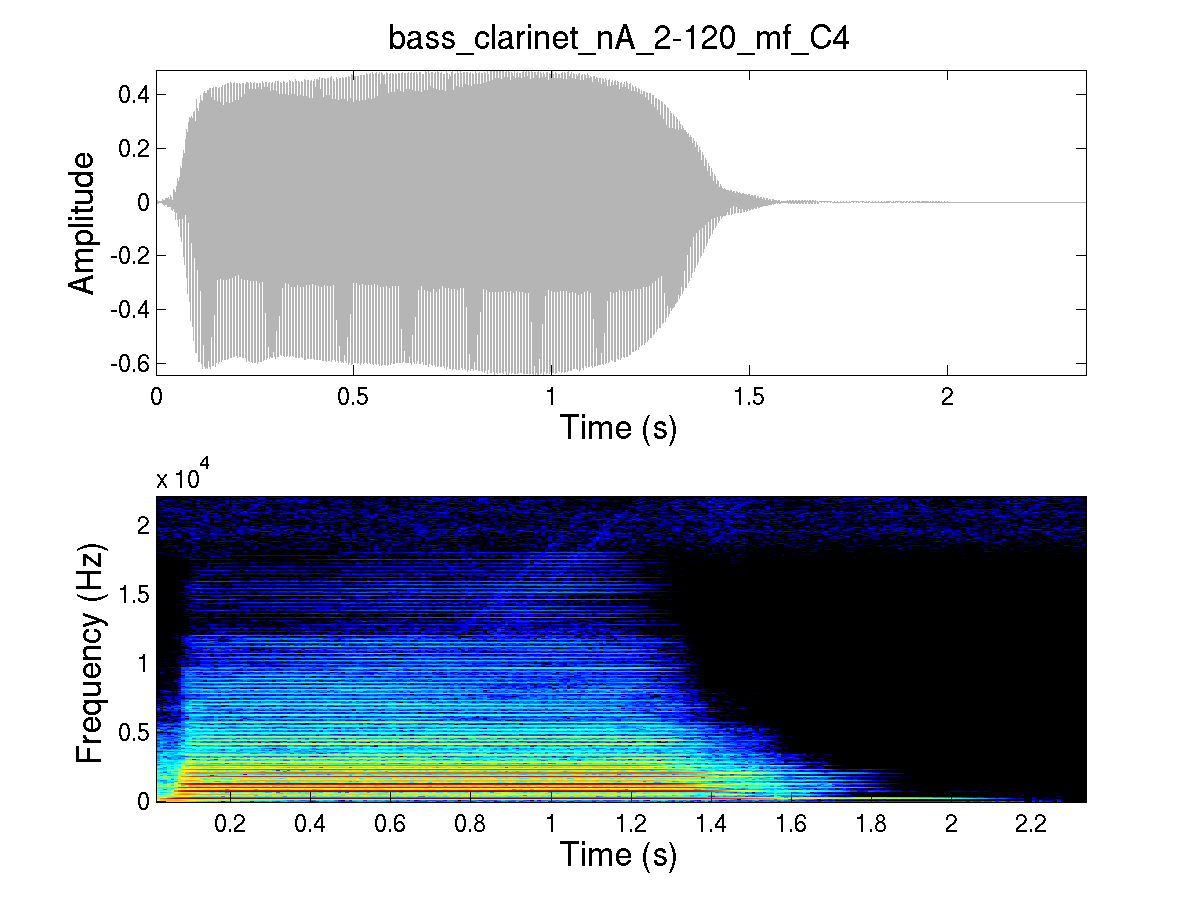

Bass Clarinet

The Bass Clarinet sound is less noisy than the Flute sound. Still, it is clear even visually that the Harmonic Sinusoidal part alone is not enough to represent the whole sound. When we listen to the Bass Clarinet, one interesting characteristic is its short attack compared with that of the Flute sound presented previously.

Original | Harmonic Sinusoidal | Residual

Temporal Modeling

The temporal modeling consists of two steps: estimation of the amplitude envelope and temporal segmentation. Each is presented individually below.

Amplitude Envelope Estimation

The estimation of the amplitude envelope is an important step in the model because the amplitude envelope is a perceptually important feature of musical instrument sounds and speech. The amplitude envelope has been shown to be correlated with the percussiveness of musical instrument sounds and with speech intelligibility, and even to affect pitch perception.

Ideally, the amplitude envelope should be a curve that outlines the waveform, following its general shape without representing information about the harmonic structure. One of the most challenging aspects of this problem is that we are looking for a curve that is smooth during relatively stable regions of the waveform, yet able to react to sudden changes (such as percussive onsets) when they occur. I proposed a novel amplitude envelope estimation technique dubbed True Amplitude Envelope (TAE) that outperforms traditional methods such as low-pass filtering (LPF) and root-mean-square (RMS) energy.
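To make the two traditional baselines concrete, here is a rough sketch of RMS and LPF envelope estimation. It does not implement TAE. The function names, the frame and hop sizes, the one-pole filter design, and the 20 Hz cutoff are all assumptions chosen for illustration.

```python
import numpy as np

def rms_envelope(x, frame_len=512, hop=256):
    """Frame-wise root-mean-square energy (one value per hop)."""
    x = np.asarray(x, dtype=float)
    n_frames = 1 + max(0, (len(x) - frame_len) // hop)
    env = np.empty(n_frames)
    for i in range(n_frames):
        frame = x[i * hop : i * hop + frame_len]
        env[i] = np.sqrt(np.mean(frame ** 2))
    return env

def lpf_envelope(x, sr, cutoff_hz=20.0):
    """One-pole low-pass filter applied to the full-wave rectified signal."""
    rectified = np.abs(np.asarray(x, dtype=float))
    a = np.exp(-2.0 * np.pi * cutoff_hz / sr)  # filter pole (assumed design)
    env = np.empty_like(rectified)
    state = 0.0
    for n, v in enumerate(rectified):
        state = (1.0 - a) * v + a * state
        env[n] = state
    return env

# Synthetic test tone (not one of the sounds on this page).
sr = 8000
t = np.arange(sr) / sr
tone = np.sin(2.0 * np.pi * 440.0 * t)

rms_env = rms_envelope(tone)
lpf_env = lpf_envelope(tone, sr)
```

Both baselines expose the trade-off mentioned above: a longer frame or a lower cutoff gives a smoother curve but a slower reaction to sharp onsets, and neither curve touches the peaks of the waveform (for a steady sine they settle near 0.71 and 0.64 of the peak amplitude, respectively).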

The figure below compares the amplitude envelopes obtained with four different methods, the three mentioned above and the recently proposed frequency-domain linear prediction (FDLP). In the figure we see the waveform of a sustained musical instrument and a plucked string with a sharp onset to highlight how the envelopes behave under these differences.


Temporal Segmentation

The temporal evolution of musical instrument sounds plays a conspicuous role in the perception of their most important features. Sound modeling and manipulation techniques could be greatly improved by a correct segmentation of musical instrument sounds that takes into account the different characteristics of each perceptually distinct region. The morphing of musical instrument sounds (especially those based on different modes of excitation) could benefit considerably from a more accurate model of temporal evolution.

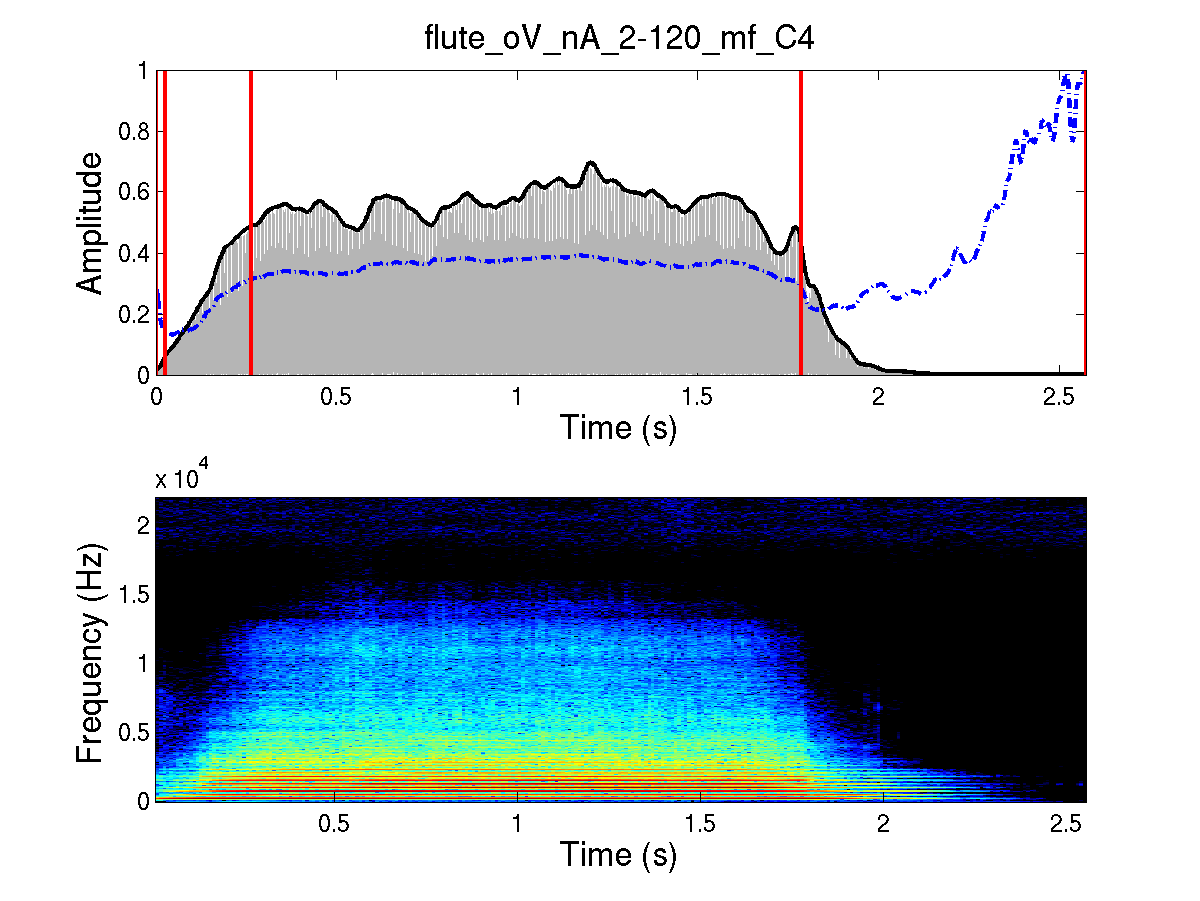

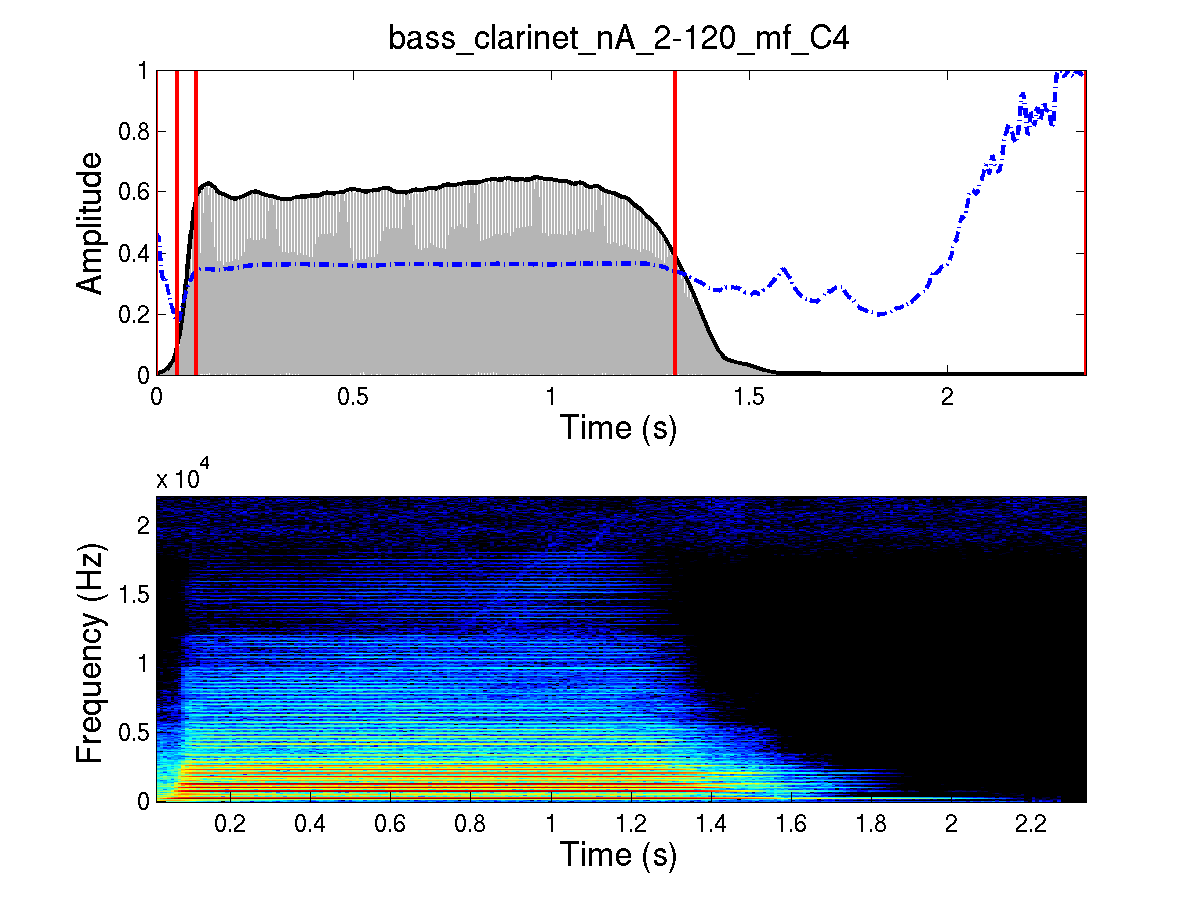

The automatic segmentation task consists of detecting events such as onset, attack, decay, sustain, release, and offset. I recently proposed using the amplitude/centroid trajectory (ACT) model for the automatic segmentation of isolated musical instrument sounds. The ACT model uses the relationship between the amplitude envelope and the temporal evolution of the spectral centroid to define the theoretical boundaries between perceptually salient segments of musical sounds.
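The two trajectories the ACT model relates can be sketched as follows. This only computes a frame-wise amplitude (here plain RMS, as a stand-in for the amplitude envelope) and the spectral centroid; it does not implement the boundary detection rules of the ACT model, which are not detailed on this page. The function name and the frame parameters are assumptions.

```python
import numpy as np

def amplitude_centroid_trajectory(x, sr, frame_len=1024, hop=512):
    """Return per-frame RMS amplitude and spectral centroid (Hz)."""
    x = np.asarray(x, dtype=float)
    window = np.hanning(frame_len)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sr)
    amplitudes, centroids = [], []
    for start in range(0, len(x) - frame_len + 1, hop):
        frame = x[start : start + frame_len]
        magnitude = np.abs(np.fft.rfft(frame * window))
        total = magnitude.sum()
        amplitudes.append(np.sqrt(np.mean(frame ** 2)))
        # Centroid: magnitude-weighted mean frequency of the frame.
        centroids.append((freqs * magnitude).sum() / total if total > 0 else 0.0)
    return np.array(amplitudes), np.array(centroids)

# Synthetic check signal: a steady 1 kHz sine (not a sound from this page).
sr = 16000
t = np.arange(sr) / sr
sine = np.sin(2.0 * np.pi * 1000.0 * t)
amp_traj, cent_traj = amplitude_centroid_trajectory(sine, sr)
```

For a real instrument sound, plotting `amp_traj` against `cent_traj` over time gives the kind of joint trajectory from which segment boundaries can be read.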

The figure below exemplifies the results obtained. The solid red vertical lines are the boundaries of the five regions automatically detected by the method we propose in the article, and the dashed light grey lines represent the baseline method. At the bottom, we see the spectrogram of each sound, which allows visual inspection of its spectral evolution. The page dedicated to the method shows several more examples.


Temporal Alignment

The first important step in morphing is the temporal alignment of perceptually different regions, such as the attack, characterized by fast transients, and the much more stable sustain. We cannot expect good results if we combine a sound that has a long attack with a sound that has a short one while ignoring this difference: the region where attack transients are combined with more stable partials will not sound natural. To achieve a perceptually seamless morph, we therefore need to temporally align these regions so that their boundaries coincide. This requires a model that correctly identifies the regions and their boundaries, for which I use the automatic segmentation based on the ACT model described above.

Once both sounds are correctly segmented, they are time stretched/compressed with independent factors for each segment so that the segments have the same duration for both sounds. Since the attack is known to be perceived roughly logarithmically, I use the log-attack time for the attack segment. Below we have an example of this temporal alignment procedure using the Flute and Bass Clarinet sounds we presented earlier.
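The per-segment stretch factors can be sketched as below. This is one possible reading of the procedure, under stated assumptions: the common duration of each segment is interpolated between the two sounds with a factor `alpha`, linearly for every segment except the attack, which is interpolated in the log domain. The segment names, function names, and durations are hypothetical, and the time stretching itself is not shown.

```python
import math

def aligned_durations(source, target, alpha):
    """Common segment durations (s); alpha=0 matches source, alpha=1 matches target."""
    common = {}
    for name in source:
        if name == "attack":
            # Assumption: interpolate log-attack time, then map back to seconds.
            log_time = (1 - alpha) * math.log(source[name]) + alpha * math.log(target[name])
            common[name] = math.exp(log_time)
        else:
            common[name] = (1 - alpha) * source[name] + alpha * target[name]
    return common

def stretch_factors(durations, common):
    """Independent factor per segment: >1 stretches, <1 compresses."""
    return {name: common[name] / durations[name] for name in durations}

# Hypothetical segment durations in seconds (not measured from the examples).
flute = {"attack": 0.2, "sustain": 1.5, "release": 0.3}
clarinet = {"attack": 0.05, "sustain": 1.0, "release": 0.2}

common = aligned_durations(flute, clarinet, alpha=0.5)
flute_factors = stretch_factors(flute, common)
clarinet_factors = stretch_factors(clarinet, common)
```

With `alpha=0` the common durations are those of the first sound, which corresponds to aligning the second sound with the original first one, as in the examples that follow.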

First, play the original sound and the time-aligned version of the other sound. Compare carefully the attack, sustain, and release segments, as well as the overall duration. Now compare the time-aligned version with the original version to see how it sounds temporally different, even though it still contains the same spectral information.

Original Flute | Bass Clarinet Time-Aligned with Original Flute

Original Bass Clarinet | Flute Time-Aligned with Original Bass Clarinet

Sound Examples

These examples illustrate the sound morphing technique I developed based on the model described above. I will show a cyclostationary morphing between the source and target sounds. This means that you will hear the source sound followed by nine different hybrid sounds that drift towards the target and finally the target sound. The transformation should be perceived as perceptually linear.

Flute & Bass Clarinet

I chose this particular example to emphasize two perceptually important aspects of the model, namely the time alignment procedure and the residual modeling. The time alignment procedure makes it possible to handle the different temporal aspects of the sounds, such as duration and attack time. The other important aspect is the inclusion of a morphed residual, which can be heard when morphing from the noisy flute sound to the cleaner clarinet sound.

From Flute to Bass Clarinet: Classical Interpolation of Sinusoidal Analysis | Morphing by Feature Interpolation

Now we do the same for two sounds that are very challenging because they do not belong to the same category. The clavinet sound is a plucked string, so its attack is radically different from that of the other sound, a tuba whose attack builds up slowly.

Clavi & Tuba

Once again you will hear the source sound followed by nine different hybrid sounds that drift towards the target and finally the target sound. The transformation should be perceived as perceptually linear across all dimensions of timbre perception.

From Tuba to Clavinet: Classical Interpolation of Sinusoidal Analysis | Morphing by Feature Interpolation