(In the forests of Nannup, Western Australia: photo courtesy of Mark Schneider)

I used to be a member of the Analysis/Synthesis Group at IRCAM. Now that my contract has concluded, my up-to-date research papers and results are located on my personal research web site. I have left the rest of this site available as an archive. My IRCAM email address has been discontinued, so please visit my web site for contact details.

Foot-tapping with Rubato

This is an example of automatic interpretation of an anapestic rhythm undergoing extreme asymmetrical rubato (tempo variation). The foot-tapper plays a hi-hat sound along with a test anapestic rhythm (a repeated short-short-long pattern) whose tempo is being varied. The tapper has found the underlying repetition rate and has selectively chosen to tap on the first beat of each group of three, following the rubato of the rhythm (with a slight error). This gives a robust means to interpret and synthesize ritards, accelerandos, grooves and swing.
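The following sketch makes the test stimulus concrete. It generates onset times for a repeated short-short-long group under an asymmetrical tempo variation, and it marks the first onset of each group, which is where the foot-tapper is meant to land. The function name `anapest_onsets`, the 1:1:2 duration ratio, the base inter-onset interval and the sawtooth-shaped rubato curve (gradual slowing, then an abrupt return to tempo) are all illustrative assumptions. They are not the parameters of the original example.

```python
def anapest_onsets(n_groups, base_ioi=0.2, depth=0.3, cycle_groups=8):
    """Hypothetical stimulus generator: return (onsets, group_starts) in seconds
    for a repeated short-short-long rhythm under an asymmetrical rubato."""
    pattern = (1, 1, 2)  # assumed relative durations: short, short, long
    onsets, group_starts = [], []
    t = 0.0
    for g in range(n_groups):
        # Assumed sawtooth rubato: the tempo slows gradually over `cycle_groups`
        # groups, then snaps back to the base tempo (an asymmetrical variation).
        stretch = 1.0 + depth * (g % cycle_groups) / cycle_groups
        group_starts.append(t)  # first beat of the group: the tap target
        for units in pattern:
            onsets.append(t)
            t += units * base_ioi * stretch
    return onsets, group_starts


onsets, taps = anapest_onsets(16)
print(len(onsets), len(taps))  # 48 onsets, 16 target taps
```

A tapper that respects the rubato should track `group_starts`, whose spacing stretches and then resets, rather than a fixed metronomic grid.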

Clapping to Auditory Salience Traces

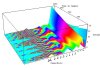

The continuous wavelet transform (CWT) of Morlet and Grossmann can also be applied to decompose a rhythm represented by a continuous trace of event "salience" derived directly from the audio signal. We use a measure of event salience developed by our EmCAP partners Prof. Sue Denham and Dr. Martin Coath at the University of Plymouth. The CWT decomposes the salience trace into a hierarchy of periodicities (a multiresolution representation). Each of these periodicities has a limited duration in time (hence the term "wavelets"). Where a periodicity continues to be reinforced by successive onsets of the performed rhythm, it persists over time. The small number of periodicities that persist in this way form "ridges". The ridges can then be used to identify periods in the rhythm that match listeners' sense of beat. They can also be recomposed using the inverse wavelet transform to produce moments in time that match the taps of the beat.
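The sketch below shows the general idea with NumPy. It is a simplified illustration, not the Smith and Honing implementation, and the following choices are assumptions:

- The salience trace is a synthetic impulse train rather than the Coath and Denham measure.
- The Morlet wavelet uses the common choice omega0 = 6, without the admissibility correction.
- Ridge extraction is replaced by picking the scale with the largest time-averaged magnitude.
- The inverse transform is replaced by tapping where the phase at that single scale crosses zero upward.

The function names are hypothetical.

```python
import numpy as np


def morlet_cwt(signal, dt, periods, omega0=6.0):
    """Complex Morlet CWT of a sampled trace, one row per period (seconds).
    Computed in the frequency domain, so the convolution is circular."""
    n = len(signal)
    x_hat = np.fft.fft(signal - np.mean(signal))  # remove the DC offset first
    omega = 2.0 * np.pi * np.fft.fftfreq(n, d=dt)  # angular frequency per FFT bin
    rows = []
    for period in periods:
        scale = omega0 * period / (2.0 * np.pi)  # scale whose centre period is `period`
        # Fourier transform of the scaled (analytic) Morlet: zero for negative frequencies
        psi_hat = np.pi ** -0.25 * np.exp(-0.5 * (scale * omega - omega0) ** 2) * (omega > 0)
        rows.append(np.fft.ifft(x_hat * np.sqrt(scale) * psi_hat))
    return np.array(rows)


def dominant_period_and_taps(salience, dt, periods):
    """Crude stand-in for ridge extraction plus resynthesis (hypothetical helper)."""
    coeffs = morlet_cwt(salience, dt, periods)
    energy = np.abs(coeffs).mean(axis=1)  # time-averaged magnitude per period
    best = int(np.argmax(energy))
    phase = np.angle(coeffs[best])
    # A tap wherever the phase at the dominant period crosses zero going upward
    crossings = np.where((phase[:-1] < 0) & (phase[1:] >= 0))[0] + 1
    return periods[best], crossings * dt


dt = 0.01                              # assumed 100 Hz salience trace
salience = np.zeros(1000)              # 10 s of trace
salience[::50] = 1.0                   # an "event" every 0.5 s
periods = np.geomspace(0.1, 4.0, 200)  # candidate beat periods in seconds
period, taps = dominant_period_and_taps(salience, dt, periods)
print(round(float(period), 3), taps[:3])
```

For this isochronous input, the reported period should be close to 0.5 s, with taps falling on (or one sample after) the events. An expressive performance would instead produce ridges that drift in period over time. Tracking that drift is what the full ridge analysis is for.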

The audio examples below demonstrate beat induction on singing. The first MP3 file is the original sung fragment (thanks are due to the Meertens Institute for supplying the Dutch folk songs from the "Onder de groene linde" collection). The second MP3 file is the same song, with the beat induced by the multiresolution representation mixed in as a hi-hat sound.

Singing Example

Accompanied Singing Example

Evaluation of a Multiresolution Model of Musical Rhythm Expectancy on Expressive Performances

Leigh M. Smith

12th Rhythm Perception and Production Workshop, poster presentation, 2009

(See Abstract)

A Multiresolution Model of Rhythmic Expectancy

Leigh M. Smith and Henkjan Honing

Proceedings of the Tenth International Conference on Music Perception and Cognition, 2008, pages 360-365

(See Abstract)

Time-Frequency Representation of Musical Rhythm by Continuous Wavelets

Leigh M. Smith and Henkjan Honing

Journal of Mathematics and Music, 2(2), 2008, pages 81-97

(See Abstract)

Model Cortical Responses For The Detection Of Perceptual Onsets And Beat Tracking In Singing

Martin Coath, Susan Denham, Leigh M. Smith, Henkjan Honing, Amaury Hazan, Piotr Holonowicz, Hendrik Purwins

Connection Science, 21(2 & 3), 2009, pages 193-205

(See Abstract)

Evaluation Of Multiresolution Representations Of Musical Rhythm

Leigh M. Smith and Henkjan Honing

PDF, 4 pages, in Proceedings of the International Conference on Music Communication Science, Sydney, 2007

(See Abstract)

Evaluating and Extending Computational Models of Rhythmic Syncopation in Music

Leigh M. Smith and Henkjan Honing

PDF, 4 pages, in Proceedings of the 2006 International Computer Music Conference, New Orleans, pages 688-691

(See Abstract)

Next Steps from NeXTSTEP: MusicKit and SoundKit in a New World

Stephen Brandon and Leigh M. Smith

PDF, 4 pages, in Proceedings of the 2000 International Computer Music Conference, Berlin, pages 503-506

(See Abstract)

A Multiresolution Time-Frequency Analysis and Interpretation of Musical Rhythm

Leigh M. Smith

PDF, 191 pages, UWA PhD Thesis, Department of Computer Science, University of Western Australia, October 2000

(See Abstract)

Modelling Rhythm Perception by Continuous Time-Frequency Analysis

Leigh M. Smith

PDF, 4 pages, in Proceedings of the 1996 International Computer Music Conference, Hong Kong, pages 392-395

(See Abstract)

A Continuous Time-Frequency Approach To Representing Rhythmic Strata

Leigh M. Smith and Peter Kovesi

PDF, 6 pages, in Proceedings of the Fourth International Conference on Music Perception and Cognition, Montreal, 1996, pages 197-202

(See Abstract)

Guest lecture for the UvA course “Music Cognition” (PDF, 3.5 MB).

Guest lecture for the Utrecht University Masters in Artificial Intelligence (PDF, 4.8 MB).

Guest lecture for the Utrecht University seminar on Technology in Musicology (PDF, 5.5 MB).

A Common Lisp library implementing the Multiresolution Rhythm model for representing rhythm, syncopation and expectation, as produced during the EmCAP project.

A numerical library for Common Lisp, which I collaborate in developing. It incorporates plotting, array syntax and linkage to the GNU Scientific Library.

I maintain the MusicKit (originally written by David A. Jaffe and Julius Smith III) and SndKit, two Objective-C libraries for music and sound representation and low-latency performance.